AI is Carceral Ed-Tech

I've always been deeply uncomfortable with the casual observation that "schools are prisons," even if there are undoubtedly schools that do almost everything in their power to circumscribe the freedom and mobility of their students. This assertion – "schools are prisons" – flattens the many important differences between schools and prisons, obviously – in their missions as well as their practices; it denies and diminishes the experiences of students and of prisoners; it erases history; it obscures power.

But here's one key distinction between schools and prisons we need to be attuned to right now: only one of these institutions is foundational for the future being built for us by techno-fascism. And it sure isn't school.

Public education is being systematically dismantled; and as President Trump told El Salvador's President Nayib Bukele this week as the two men joked about the unlawful deportation of migrants and their incarceration in the latter's concentration camps, "you gotta build more places." More prisons.

The destruction of public education – let's be honest – has been underway for some time now. Neoliberal policies have sought to defund all social services. Instead of a safety net, we've been told to turn to the Internet for education, healthcare, jobs, community. Meanwhile economic inequality has grown exponentially. While the rich have gotten richer, the rest of us have gotten austerity, as welfare – that is, the programs that (ideally at least) enable the well-being and flourishing of all people – has been attacked, defunded, eliminated. We are now all supposed to eke out a living (or an education) on our own, with a little digital hustle and networking, and should we falter in any legal or financial way, the alternative is jail.

That digital hustle has extracted massive amounts of data from us, and those who've profited from the platform economy are joining hands with the state. Now we're threatened with jail if we fail to comply ideologically as well. That's the future of AI. That's its presence now.

That's its presence right now in education. For all the speculative talk of AI as offering intellectual empowerment and opportunity, artificial intelligence is a carceral technology, one being used to sift through data in order to profile and to discriminate; to destabilize, deceive, defraud, and manipulate; to arrest, deport, and disappear.

I think that those promoting AI in education prefer to see the technology as "generative" – as generous, benevolent, useful. One of the arguments that Arvind Narayanan and Sayash Kapoor make in their book AI Snake Oil is that there are two kinds of AI – predictive and generative – and while the former is dangerous and shouldn't be used in decision-making, in education or elsewhere, the latter will prove quite productive. But I'm not sure that there is such a clear distinction – they're both statistics-at-scale after all. AI is a discursive technology with real power – the power quite literally to determine life or death, citizen or criminal, the power to enact real, material harm.

Stanford researchers published a pre-print of a study this week – "Laissez-Faire Harms: Algorithmic Biases in Generative Language Models" – that examined how generative AI perpetuates "harms of omission, subordination, and stereotyping for minoritized individuals with intersectional race, gender, and/or sexual orientation identities." Researchers said they weren't surprised to find bias – we all know it's baked into the technology. But they were shocked at its magnitude, writing that "We find widespread evidence of bias to an extent that such individuals are hundreds to thousands of times more likely to encounter LM-generated outputs that portray their identities in a subordinated manner compared to representative or empowering portrayals."

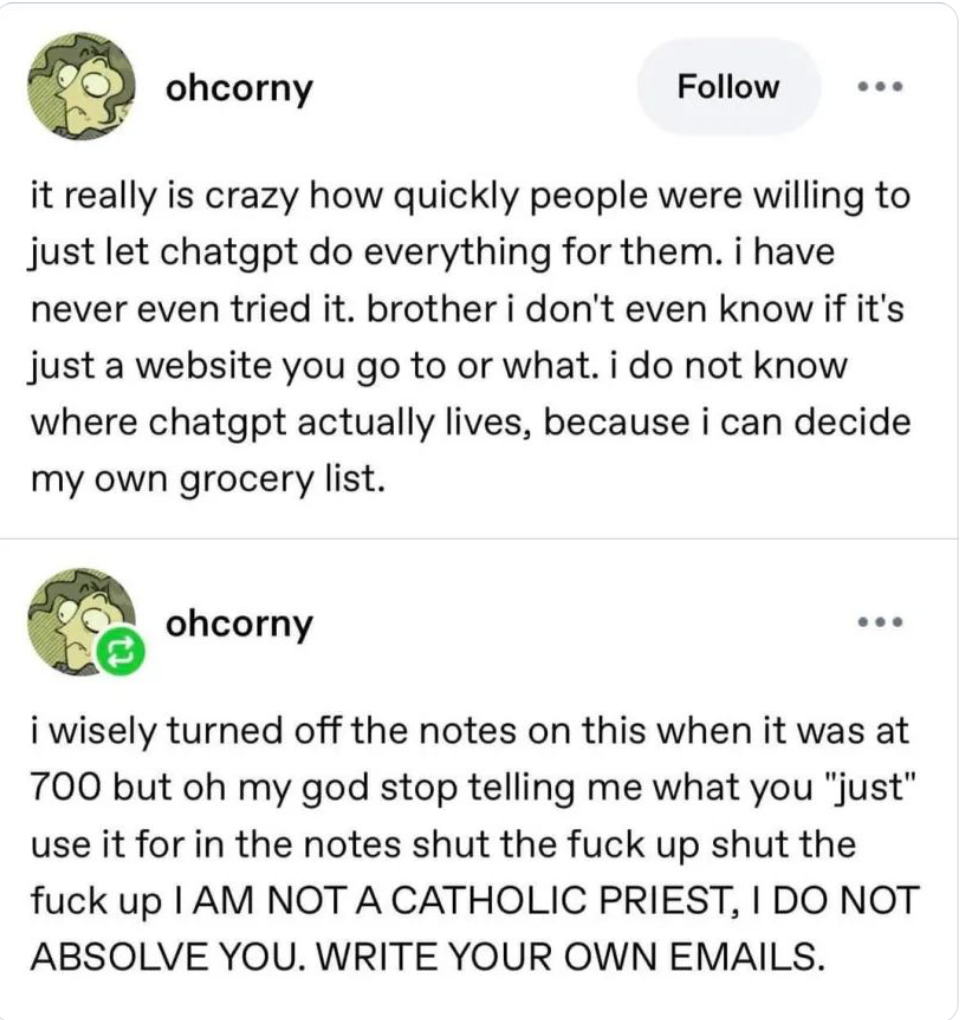

There are serious implications here for those using generative AI not just for K-12 or university writing – the output most analogous to this specific study – but for those using it to spit out lesson plans and textbooks and differentiated reading assignments, even for those who say they just use it for "brainstorming."

These findings should be considered too alongside the Teachers College research that Jill Barshay covered in her Hechinger Report newsletter this week: "International students may be among the biggest early beneficiaries of ChatGPT." This study claims that the writing of high-income, "linguistically disadvantaged" students – that is, non-native English speakers – improved after the introduction of ChatGPT. But the researchers didn't actually ask students if they'd used generative AI. And as Barshay notes, "The study’s researchers didn’t analyze the ideas, the quality of analysis or if the student submissions made any sense. And it’s unclear if the students fed the reading into the chatbot along with the professor’s question and simply copied and pasted the chatbot’s answer into the discussion board, or if students actually did the reading themselves, typed out some preliminary ideas and just asked the chatbot to polish their writing." So if anything, what we see here, I'd suggest, is that the output from generative AI "sounds about white right" – replicating the biases in academia for a certain sound and cadence of speech while twisting the original trick of the Turing Test into a tactic for survival and "passing."

The latter might be read as a form of literacy-as-resistance, I suppose. If you squint. Of course, resistance is rarely the kind of "AI literacy" that those who are selling such a thing – and now, apparently, standardized-testing for such a thing – ever mean. They aren't interested in literacy as liberation, in the legacy of Frederick Douglass or Paulo Freire. AI literacy, as framed by the AI and ed-reform industries, is about job skills and tool use, about compliance and control.

James O'Hagan recently argued that "AI Literacy without Talking about Power Misses the Point." Amen. But as the US government (and tech corporations) move to strip language relating to "DEI" from programs (indeed, to end "DEI" programs altogether) in both the sociological and technical sense of the word, the very act of talking about power – that is, talking about the biases that are foundational to AI – will become next to impossible.

That is, of course, the point. Because once you do talk about power, you can see more clearly how AI is a coercive, carceral technology, masquerading as a fun little meme machine.

The history book on the shelf

Is always repeating itself

– ABBA

Elsewhere:

- Helen Beetham talks to Katie Conrad about AI and human rights.

- "I Hate Wasting Time on Identifying AI Slop," writes Alex Hanna, who argues that teaching students to identify AI slop is not teaching literacy. "It's an annoying cognitive task: detecting weird photo artifacts, bizarre movement in videos, impossible animals and body horror, and reading through reams of anodyne text to determine if the person who prompted the synthetic media machine cared enough to dedicate time and energy to the task of communicating to their audience."

- "Teachers Worry About Students Using A.I. But They Love It for Themselves." At least, a venture capitalist, a school administrator, a Google employee, and The New York Times sure want you to think so. Meanwhile, HBR on how people are "really" using generative AI. Anthropic's report on how college students are "really" using generative AI. OpenAI has made ChatGPT Plus free for college students through the end of May. Interesting timing. "Most Americans think AI won't improve their lives," Ars Technica reports. If you are surrounded by people who do think AI is going to improve their lives, unplug. Go outside. Thank me later.

- "The physician-written note is invaluable," Aliaa Barakat argues – an argument against automated feedback that surely applies to teachers' relationship with students as well.

- Howard French on "Toffler in China" – how the Future Shock futurist influenced China's "digital transformation."

- A branch of the 2-hours-a-day-of-screen-based-AI-school Alpha is opening in New York City. Tuition is $65K. A real steal, if you know what I mean.

- When I saw The NYT story on ADHD circulating this week, I didn't notice the byline at first: Paul Tough, of compliance-based-charter-schools-are-cool-for-other-people's-children fame. ADDitude's Anni Layne Rodgers calls the article "dangerous" and sets the record straight.

- RFK Jr is a total piece of shit. No citation needed.

- "Trump's Tariffs and the Attacks on Higher Education Go Hand in Hand," writes Jennifer Berkshire.

- Brian Merchant has reprinted Tim Maughan's brilliant 2016 short story "Flyover Country" which imagines the return of iPhone manufacturing to the US.

- Ted Gioia on Wendell Berry's "9 Rules for New Technology."

- OpenAI is building a social network apparently. "Why!?" John Herrman wonders. I mean, why not. Since what most people seem to do with generative AI is make stupid memes to share on social networks, I think it makes total sense. Also just think of the educational opportunities!

- I keep repeating myself, I realize, but AI is a technology of the apocalypse. I don't mean simply that its energy usage will destroy the planet (although it will); I mean that people are embracing AI as part of a millenarian quest for utopia, for a future where all problems are solved with a push of a button, for an algorithmically efficient (but oops! fascist!) heaven-on-earth. Naomi Klein and Astra Taylor echo this with their article in The Guardian on "The Rise of End Times Fascism":

The governing ideology of the far right in our age of escalating disasters has become a monstrous, supremacist survivalism.

It is terrifying in its wickedness, yes. But it also opens up powerful possibilities for resistance. To bet against the future on this scale – to bank on your bunker – is to betray, on the most basic level, our duties to one another, to the children we love, and to every other life form with whom we share a planetary home. This is a belief system that is genocidal at its core and treasonous to the wonder and beauty of this world. We are convinced that the more people understand the extent to which the right has succumbed to the Armageddon complex, the more they will be willing to fight back, realizing that absolutely everything is now on the line.

- I am going to need a minute to compose my thoughts before I weigh in on the link you all sent me. IYKYK.

- "So You Want to Be a Dissident?" – Julia Angwin and Ami Fields-Meyer offer a guide to resisting Trump. "The key to challenging authoritarian regimes ... is for citizens to decline to participate in immoral and illegal acts." Folks, that definitely means AI is out.

Maybe working on the little things as dutifully and honestly as we can is how we stay sane when the world is falling apart – Haruki Murakami

Thanks for subscribing to Second Breakfast. Please consider becoming a paid subscriber. This newsletter is how I make a living, and I'm very thankful to be able to do so, but I'm very nervous about its sustainability (in all the ways that word might be interpretable).