AI Unleashed

One of the things I told myself when I decided to return to writing about education technology was that, thanks to therapy (and, no doubt, to leaving social media), I’d learned how to better manage my emotions around the onslaught of very-bad-news. This week has been a real test. And I’d say I’m mostly managing by avoiding the news, or as much of it as I can while still doing my job (which includes, every Friday, writing you an email detailing some of the week’s AI and education news).

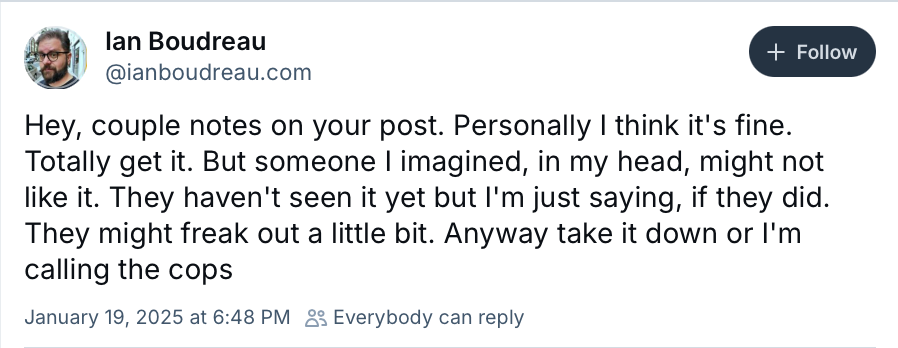

During the last Trump Presidency, I recognized how toxic "the news" could be – that is, in fact, when I left Twitter. As Ryan Broderick argued in a Garbage Day email on Wednesday, part of the Steve Bannon (and thus, the Trump) playbook has always been to “flood the zone with shit” — a flurry of distraction and manipulation that may or may not reflect the new administration’s actual plans or capabilities (legally, practically, or otherwise) but that is absolutely designed to have us all swirling in “a perpetual motion machine of bull shit. Full stack Trumpism.” A psychological denial-of-service attack where we are unable to function.

Cultivating this feeling of destabilizing powerlessness is intentional.

I don’t have any really good advice for you, other than to take care of yourself. Think about who you follow and what you read. You don’t have to weigh in on every terror or tragedy — honestly, screw people who suggest that if you don’t, you don’t care. Performative meme-sharing isn’t the only form of revolutionary praxis. Really. I promise. The future of the planet does not depend on your clicks. We’ve got a long four years (at least) ahead of us.

We can have our eyes wide open, and we can bow our heads, close our eyes, and breathe.

I’m preparing a presentation I’ll be giving in a couple of weeks to a group of NYC principals, and so I'm rereading some of my old talks, trying to recall what it's like to stand up in front of a crowd of people and be smart and engaging. And funny thing: these old talks are full of references to AI.

I mean, of course they are. I'm repeating myself, as it was only a week or so ago that I invoked Rip Van Winkle to remind you: the portrait above the pub door used to be King George and now, upon waking from a lengthy slumber, it’s President George — but at the end of the day, what’s the difference?! It's still George.

A decade ago, folks were giddily predicting that Knewton's "mind-reading robo-tutors in the sky" and robot-essay graders and IBM Watson and MOOCs-as-self-driving-universities were poised to change education forever. Hell, for seventy-five years now, we've been told that artificial intelligence is almost just about to utterly transform teaching and learning. Soon. Someday. And now, now, it’s for real. But I'm flipping the pages of the calendar backwards and forwards and thinking it sure seems, based on this long history, that we have time to move thoughtfully (to consider, to be considerate) rather than act as though we don’t have any time (or even any inclination) to think at all.

Again, do note this feeling of destabilizing powerlessness that many AI proponents seek to conjure: this story that the world is changing more rapidly than ever before, that this technological change is unprecedented and unavoidable, that you must adopt AI now or you’ll be left behind, that their vision of the future is the only one that offers certainty, security, and safety.

Everything that needs to be said has already been said. But since no one was listening, everything must be said again. – André Gide

In the final days of the Biden Administration, the FTC finalized updates to COPPA, but there’s no comfort, I don’t think, in any protection purportedly offered here.

In the first moments of the Trump Administration, the White House issued an executive order rescinding the Biden Administration's 2023 executive order on AI. (Apparently, some of the Week One executive orders were AI-generated: exemplary automated thoughtlessness.) The new administration has also scrubbed the content of the old White House website, including this: "The Cumulative Costs of Gun Violence on Students and Schools."

Trump has cleared the way for immigration arrests at schools by rescinding the "sensitive areas" policy that had, for decades, prevented this violence, Chalkbeat reports. Everyone in education – teachers, administrators, students, parents – needs to know their rights: rights to physical safety, no doubt, but also to the security and privacy of data. Education technology, particularly with its embrace of surveillance, predictive policing, and algorithmic decision-making, can put people in grave, grave danger.

I worry that glee about generative* AI provides cover for some serious human-rights abuses that are built right into this whole technological project.

Record scratch… the British government calls their AI plan "Blitzscaling"?!?!? (Oh. I guess that's a common word in techno-business speak? Totally not an homage to the Nazi military, I'm sure.)

As always, Ben Williamson is the one to read on AI in the UK and beyond: "Piloting Turbocharged Fast AI Policy Experiments in Education."

At least this gave me a good laugh:

“40 years ago, back in 1985, when Madonna was getting into the groove and Bett had only just begun, no one could have predicted the edtech of 2025. And there’s no way to know what the next 40 years will bring for AI and beyond” – Bridget Phillipson, the British Education Secretary, speaking at Bett

You know what was published 40 years ago (okay, in 1984, not 1985)? Benjamin Bloom's "2 Sigma Problem," a paper that many ed-tech and education reform folks still love to cite, even though its findings on the incredible effect of one-on-one tutoring have not been replicated.

Whoever could have predicted that people would still be obsessed with intelligent tutoring systems and chatbots?! (Pretty much everybody?)

"Scientists Have Resurrected ELIZA, the World’s First Chatbot," Gizmodo reports. Nice verb choice in the headline there, as it's a very Frankenstein move, no doubt. Elsewhere, an attempt to use AI to "build Marcus Aurelius’ reincarnation." But if you think Roman Empire bots are bad, check out the "schools using AI emulation of Anne Frank that urges kids not to blame anyone for Holocaust."

"AI isn’t very good at history, new paper finds" – so says TechCrunch, which, honestly, isn't very good at history either. Not so, insists UC Santa Cruz's Benjamin Breen: “The leading AI models are now very good historians.” Well then! Lots of growth potential in the field too, as apparently "The Business of History Is Booming," according to Bloomberg. That is to say, history books and history podcasts are popular, and neither necessarily requires actual, working historians.

Historian Dan Cohen cites a recent study on the use of generative AI in high school math classes to explore "the unresolved tension between AI and learning." Math education researcher Dan Meyer reviews two more recent studies on the use of AI in the classroom. There’s a flood of academic papers on AI posted to LinkedIn every single day; all that activity is probably why Microsoft’s energy usage is way up. (It’s definitely not AI causing massive electricity and water consumption, I’ve been repeatedly informed.)

A friendly reminder that a meta-analysis of one hundred years of research on ed-tech looks something like this: some students showed some improvement on a standardized test in a specific subject area, after using ed-tech in a class taught by a supportive educator well-trained in that subject area and in the technology in question.

Speaking of doing well on standardized tests: "Coming Soon: PhD-Level AI super-agents," Axios informs us. I'm considering going back to grad school to finish my PhD, incidentally, having been ABD for over 20 years now. So I gotta say: if you have a task you really want to get done in a timely manner, you might not want to get a PhD-level agent to do it. There is that beautiful moment, however, when a first-semester college freshman has so much academic enthusiasm: build that super-agent, maybe?

The TikTok stuff both is and is not intertwined with education technology, so I’m both paying attention and not. For those who are paying closer attention: Taylor Lorenz on TikTok and "The Great Creator Reset." I linked to Tressie McMillan Cottom's recent op-ed in Monday's newsletter – "The TikTok Economy is All Americans Have Left" – because I do think that there's something important going on with "the youths" – that is, anyone under 50, by my calculations – and their belief in social media-based entrepreneurialism.

Public institutions have been systematically defunded and dismantled over the past forty or fifty years, and we’ve been told — explicitly and implicitly — to turn to the Internet instead.

So now what?

Edward Ongweso Jr reports on the "AI delusions" at CES, with thoughts on Las Vegas as "a laboratory for surveillance and social control" – a laboratory whose technology is based on behavioral engineering. And considering this remains the basis for much of ed-tech – despite all the talk of some "cognitive turn" – it's probably worth thinking about the bells and whistles already governing the classroom, well before the tech industry rebrands everything as "AI-enabled" and then further obscures accountability.

In other BF Skinner-related storytelling: Shannon Vallor argues in The AI Mirror that the first robot was a steam-powered pigeon.

“It matters what matters we use to think other matters with; it matters what stories we tell to tell other stories with; it matters what knots knot knots, what thoughts think thoughts, what descriptions describe descriptions, what ties tie ties. It matters what stories make worlds, what worlds make stories.” — Donna Haraway, Staying with the Trouble

In the last few weeks, I’ve noticed a growing refrain, one where "AI in education" is framed in simplistic, binary terms: very much a "you’re either with us or against us" sort of thing. At best, it's pretty reductive. But at this particular moment politically, it's awfully frightening, particularly as the technology oligarchs flex their muscle, with clear ambitions for violence and vengeance.

This blog post from Maha Bali demonstrates the range and the complexity of the reasons why people refuse AI. She lists "Different Critiques of AI in Education," noting that "the nuanced differences between them is important, because I think this means sometimes we agree to resist AI but our reasons come from different perspectives, such that if one small thing changes, it makes a huge difference to us, and one of us may changed their minds… while others won’t."

A clear articulation of values is, I strongly believe, going to be crucial moving forward, particularly as the Trump Administration and the AI / technology industry demand schools and educators (and students and communities) act in ways that violate their fundamental principles.

Thanks for reading Second Breakfast. Please consider becoming a paid subscriber. Your financial support enables me to continue this work: reading and writing and learning about AI without AI.

*Maybe it’s not “generative” as much as replicative. It's predictive AI with a better marketing budget. Admittedly, I just like the word “generative” a lot, and don’t like it being co-opted by industry, techno-fascism, or necropolitics.