Automated Contempt

The political-technological crisis is only deepening in the United States, and I hardly know where to begin today's email to you, other than to say that I hope you're okay. And if you're not, do feel free to hit "reply" – I can't promise anything other than a very human ear that listens, a very human heart that cares, and very human hands that will reply (at some point. I promise).

It's not much, granted, but holy shit, it's better than the illusion offered by a chatbot – a predictive model of yesterday’s speeches, regurgitated as the performance of care.

We cannot outsource thinking and compassion to a hierarchy-generating machine and expect the world to be anything other than automated emptiness and exploitation.

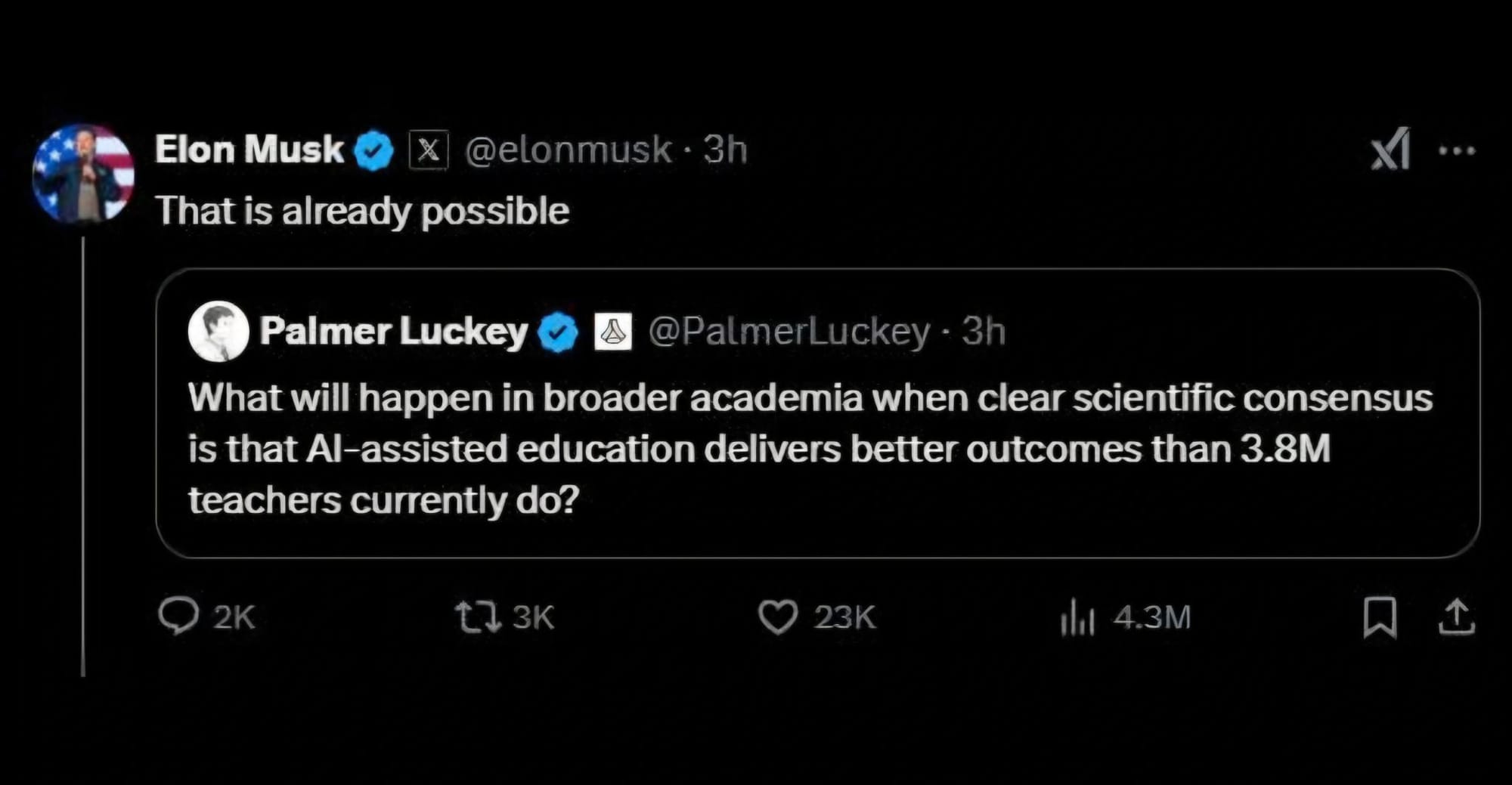

But ah, the glee from those who want our lives to be flattened:

As I have tried to make clear in my work, technologists and entrepreneurs have spent the last century devising ways to replace teachers with machines – seriously, a century. Sidney Pressey exhibited the first teaching machine in 1924. One hundred years of trying to replace teachers with machines. Men like Pressey have invoked "science" and "innovation" all along the way, claiming that automation will make learning better, faster, cheaper, more scalable, more "personalized."

But to be clear, the "better outcomes" that Silicon Valley shit-posters Palmer Luckey and Elon Musk fantasize about in the image above do not involve the quality of education – of learning or teaching or schooling. (You’re not fooled that they do, right?) They aren't talking about improved test scores or stronger college admissions or nicer job prospects for graduates or well-compensated teachers or happier, healthier kids or any such metric. Rather, this is a call for AI to facilitate the destruction of the teaching profession, one that is, at the K-12 level, composed predominantly of women (and that, in the US, forms the country's largest union) and, at the university level – in their imaginations, at least – composed predominantly of the "woke."

This week, the Trump Administration continued to fire thousands of federal employees, to purge government data, to slash millions of dollars in federal funding, and to threaten even more financial penalties if certain programs and policies, particularly those related to civil rights, are not terminated. Either directly or indirectly, every school at every educational level has been impacted. Schools are reeling. People are reeling.

"The cruelty is the point," as Adam Serwer wrote back in 2018, early in the first Trump presidency. Indeed, Russell Vought, architect of Project 2025 and now head of the Office of Management and Budget, has declared, "we want the bureaucrats to be traumatically affected... When they wake up in the morning, we want them to not want to go to work, because they are increasingly viewed as the villains. We want their funding to be shut down … We want to put them in trauma."

These explicit acts of cruelty and trauma – efforts to harm people whose careers are in public service (among the many, many others who are targeted by this administration, particularly trans and immigrant children) – make me really bristle at almost any talk of AI in education right now, particularly any talk that seems to delight in the possibility espoused by Musk and Luckey and so many others in and adjacent to Silicon Valley: zero-cost course development and teacher-free courses and the like.

That has long been the dream of education technology (and perhaps of mass education more broadly), but despite one hundred years of trying, this teacher-free fantasy has never been realized because, as EduGeek Journal points out in a response to some of this week's speculative AI chatter, it gets so very much wrong about the work of teaching and learning at almost every step. About what it means to be, to become human. It gets so much wrong pedagogically. It gets so much wrong morally. And at this particular moment in capital-H History in which we’re all unfortunately trapped, this utter disdain for the labor and expertise of instructors and instructional staff feels particularly gross. Dangerous, even.

AI, Dan McQuillan argues, is "a political technology in its material existence and in its effects. … the consequences are politically reactionary. The net effect of applied AI... is to amplify existing inequalities and injustices, deepening existing divisions on the way to full-on algorithmic authoritarianism." Perhaps you disagree – perhaps you look at the technology (and its investors, its spokespeople) and see something a little bit liberatory for yourself. Perhaps you've found a nifty trick – a timesaver, a shortcut, a hack. (Note how hard it is to talk about AI in terms other than labor efficiency – which is, let’s not forget, the language of management and control.) Perhaps you think that, if you and yours are sufficiently “upskilled” (and/or sufficiently obsequious to Trump policies or to the new AI lords), it'll all turn out okay.

Listen, it’s not that there isn’t some really interesting AI research being done. (See: Melanie Mitchell on whether or not LLMs have "world models," parts 1 and 2, for example.) And I am trying to heed something that Maha Bali recently wrote, urging us not to engage in AI shaming. (Or at least I’m thinking about it.)

But good grief, to all those cooing about education's coming automation as we watch public institutions be looted and civil rights reversed, as people in your field and in your neighborhood are being intentionally hurt and traumatized, all as part of a plan to use AI to usher the tech oligarchs into a new Age of Empire and the rest of us into submission: “Read the fucking room.”

“I am utterly disgusted. If you really want to make creepy stuff, you can go ahead and do it, but I would never wish to incorporate this technology into my work at all. I strongly feel that this is an insult to life itself.” — Hayao Miyazaki on AI

Last month, the Vatican released a doctrinal note on artificial intelligence, "Antiqua et nova" (Old and new). It’s very long and gloriously footnoted. You should read it, even if you're not Catholic (or heck, even Christian), even if you can’t imagine the Pope et al. have anything to say about technology. (Although, to be fair, the Church has survived several “information revolutions,” so perhaps there is some wisdom in there somewhere.)

As Paul Ford writes in his response, "God Gets Involved," the document considers much more than the narrow question of, oh, say, whether AI can replace teachers and instructional designers (although it does discuss that too). It tackles the biiiig question: Can AI replace God?

AI may prove even more seductive than traditional idols for, unlike idols that ‘have mouths but do not speak; eyes, but do not see; ears, but do not hear’ (Ps. 115:5-6), AI can ‘speak,’ or at least gives the illusion of doing so (cf. Rev. 13:15). Yet, it is vital to remember that AI is but a pale reflection of humanity—it is crafted by human minds, trained on human-generated material, responsive to human input, and sustained through human labor. AI cannot possess many of the capabilities specific to human life, and it is also fallible. By turning to AI as a perceived ‘Other’ greater than itself, with which to share existence and responsibilities, humanity risks creating a substitute for God. However, it is not AI that is ultimately deified and worshipped, but humanity itself—which, in this way, becomes enslaved to its own work.

(I really want to write more about this document, and not just because there's a whole section on AI and education. But today is not that day. Poppy is in the hospital, recovering from surgery. She ate a tennis ball – the x-ray confirmed it. I can’t even come up with a robots-replacing-radiologists punchline, I’m so emotionally exhausted from everything right now.)

A few much shorter things to read. Well, only slightly shorter, of course, in the case of dear Ed Zitron on "The Generative AI Con," which comes with a lot more hellfire and brimstone than Pope Francis ever musters. Emily Pitts Donahoe with a rant "Against 'Efficiency.'" Rob Nelson "On Confabulation." (I prefer "confabulation" to "hallucination" to describe AI's errors, for sure. But even more, I like the word "bullshit," particularly as it ties to Cory Doctorow's notion of "enshittification" – in this case, not just making software worse but enshittifying entire systems.) Amanda Perry argues that "You Can’t Solve the Teacher Shortage by Pretending Anyone Can Do the Job." Anyone, anything – including computer software.

Kin told me the other day about a post he saw on LinkedIn, some guy’s story about how his hometown had two barbers. One advertised $7 haircuts. The other advertised that he fixed $7 haircuts.

It’s a cute albeit obvious analogy for AI, and I think it works for the other tasks ahead of us too, for the regrowing and rebuilding we will have to do after all this MAGA/Musk destruction, technological and otherwise.

But I’d like to offer a little tweak to this “just-so story.” I propose we replace the second barber with a hairstylist – that is, with a woman. (Sadly, it means we've probably got to increase the cost of the haircut by a lot.) Because frankly, it will be women who have to fix this mess. It always is. And it'll be the women whom men don't see – the ones who have always provided the invisible, unthanked, unrecognized, un- and underpaid reproductive labor that keeps this world clean and clothed and fed and taught.

Thanks for reading Second Breakfast. Please consider becoming a paid subscriber. I know that journalism – education, technology, and otherwise – is in a bad place at the moment, and I'm just one of many writers trying to piece together a living without any institutional backing and hoping you'll pay to read my words. I deeply appreciate the support. This Friday newsletter is always freely available – and usually chock-a-block with the lovely AI and ed-tech news from the week. You can blame or thank Poppy that there are fewer links in today’s missive.