Bad Taste, Unfulfilling

I regret to inform you that certain political pundits and education reformers are calling for the return of "high stakes testing." Or at least David Frum talked to former Secretary of Education Margaret Spellings in a recent podcast published by The Atlantic where this dismal idea was (once again, as always) posited as the fix for "the decade-long decline in U.S. student achievement." You know that the elite are fresh out of ideas when they have to wheel out a member of George W. Bush's cabinet to talk about policy proposals they promise (this time, really) will revitalize the country.

That the incessant demands of standardized testing – demands on teachers and students – have contributed to people's growing dissatisfaction with public education is largely waved away in this discussion. Testing – indeed, all of schooling – is clearly something we do to people rather than a space and a practice constructed with, by, and for people.

I'd wager that a return to high-stakes testing would only serve to further undermine our confidence in schools. But perhaps that is the plan.

As I argued in Teaching Machines, it's impossible to separate the history of standardized testing from the history of education technology. Although education psychologists in the early twentieth century designed machines they said could teach, they also built machines that could test – sometimes, as with Sidney Pressey's device, these were one and the same. Standardized testing, of course, had grown in popularity in this period – it was seen as one of the key ways to measure and rank student aptitude and intelligence – particularly after it was used in World War I to evaluate military recruits. But while the Army Alpha and the early standardized tests administered in schools were scored by hand, new machinery promised calculation, computation at scale. It's more objective; it's more scientific; it's more efficient – you can see these claims still echoed in Frum and Spellings's conversation.

It should be no surprise, if we consider the long, intertwined history of testing and technology, that the big surge in school expenditure for one-to-one computing in the 2010s came from legislation and funding that tied tech purchases to testing objectives. Oh sure, folks probably prefer narratives about "networked learning" and "future readiness" and whatever other clichés the PR people taught them to parrot; but the impetus for computers in schools has mostly revolved around assessment. That computer usage also means a constant stream of data that can be used to build algorithms that can be sold back to schools as "personalized learning" (then) or "AI" (now) is another boon, but again, one that education psychologists were already fantasizing about in the 1920s.

Back in 2012, there was a flurry of excitement about the use of automated grading algorithms in standardized testing. Perhaps you recall the study, published by the University of Akron's Dean of the College of Education Mark Shermis along with Ben Hamner, a data scientist from a company called Kaggle, that claimed that robots and humans graded pretty much the same: "overall, automated essay scoring was capable of producing scores similar to human scores for extended-response writing items with equal performance for both source-based and traditional writing genre."

Or perhaps you don't recall, because a lot of folks now write as though we're facing automated essay grading for the very first time. Honestly, it makes me feel a little crazy when you do that, guys. (Or, at the very least, a little old.)

I realize that much is invested in the story that this moment is the "AI" moment, but I'd urge you to think about the ways in which – in education at least – standardized testing and education technology have worked hand-in-hand to shape thinking, reading, and writing for decades now (derogatory), to reduce students to data points, to see teaching as transmission, and to see grading as a feedback mechanism – everything, everyone reduced to a machine-metaphor.

Who looks at the world and thinks that what schools need – what democracy needs – is more testing and more technology (more compliance, more punishment, more discipline, more surveillance, etc.)?!

Oh.

When a beer-brewing competition introduced an "AI"-based judging tool midway through the event, folks were pissed. "There’s so much subjectivity to it, and to strip out all of the humanity from it is a disservice," one beer judge told 404 Media.

I guess the grownups in the craft beer world just don't like accountability, am I right, Margaret?

Or maybe those grownups were suspicious that this was a plan to extract data, extract value from the craft brew community so that someone else could profit, so that their labor could be replaced.

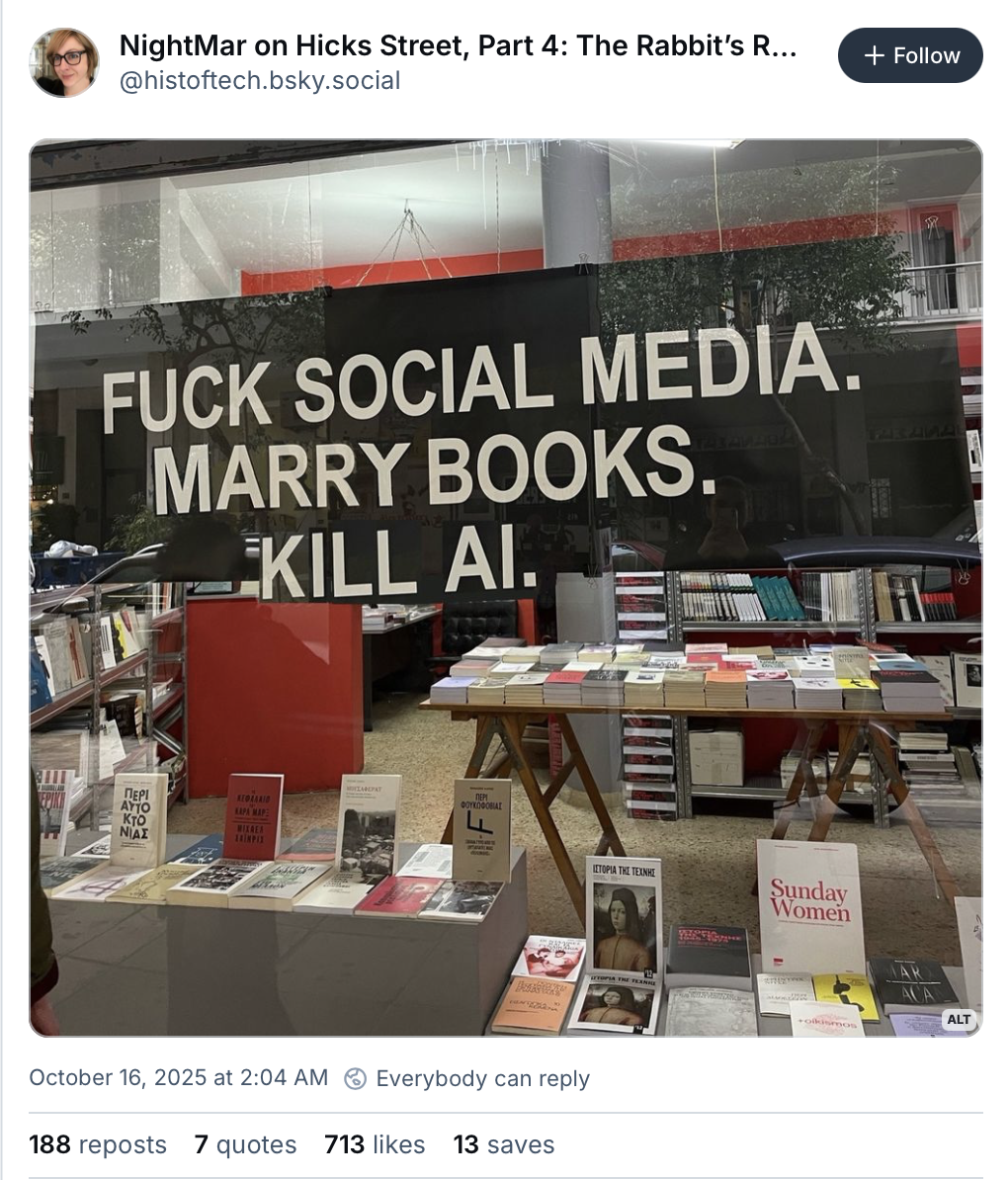

Garbage Day's Ryan Broderick on "The Great Dumbening":

...Why are these guys so fucking stupid?

But their blatant stupidity is why they’re so popular. It’s the uncomfortable truth underpinning pretty much everything that’s happened in pop culture — including politics — since the 2010s social media revolution. The online platforms that created our new world, run on likes and shares and comments and views, reshaped the marketplace of ideas into an attention economy. One that, like a real economy, is full of very popular garbage. And, also like a real economy, is now so vast and important that it’s virtually impossible to change it. If you want access to it, you better get comfortable making lowest-common-denominator bullshit in front of a camera. And, of course, it’s a lot easier to feel good about doing that if you’re an idiot.

A brief aside here, but this is the big bet that AI companies are making right now. That our tastes have grown so rotten and atrophied that we won’t even care when our feeds start filling up with slop.

Although there aren't any official numbers to back this up, a reporter from Business Insider claims that Sora 2, OpenAI's new video-slop feed, is "overrun with teenage boys" and that a lot of what's generated and shared is "edgelord humor." This would follow the trajectory of much of computing, much of the Internet, of course – designed for, catering to young men; encouraging violent, racist, misogynist content while insisting it's all harmless good fun. (A recent paper in Gender and Education examines the ways in which the "manosphere" infiltrates classroom technologies – the "affective infrastructure" – as well.)

Some of this makes it hard to not frown at the mindlessness of the latest "6, 7" meme, I suppose. It emerged on TikTok; and it sure seems like an example of "brain rot." Certainly The Wall Street Journal had a good hand-wring over it this week, trying so very hard to address the concern – it's "making life hell for teachers"! – and find some meaning somewhere, anywhere in the numbers.

I would say I'm passionately ambivalent about this sort of thing. I mean, yes, it's dumb. But saying and doing dumb things – and finding this to be peak humor, particularly when adults don't laugh – is one of the great joys of childhood. The folklore of children and teens has always involved learning and testing and subverting boundaries, figuring out and exploring their power, their bodies, their emotions, their sociality.

Technology (and, of course, COVID) has altered some of this, no doubt. It has changed how children play (increasingly "alone together," as Sherry Turkle put it). It has altered the speed with which rhymes and sayings are spread. I mean, there's already a South Park episode.

In some ways, I worry a lot less about the goings-on in the subculture of children and more about the goings-on in the dominant culture of adults.

And there, there is a larger cultural shift at play – not simply towards "brain rot," but towards "trolling" – the kind of provocation that "6, 7" might gesture towards but that is more fully (more destructively) enacted in in-group/out-group antagonism. Trolling is a cultural embrace of harassment. It's an embrace of manipulation. And then, with the troll's dismissive shrug, you're the asshole for getting upset.

We live in a culture of deception; we live in a culture of fraud. I've written before about the connections between LLMs and MLMs, and Brian Merchant noted something similar this week: "AI profiteering is now indistinguishable from trolling."

The "AI" bubble – it's giving Enron vibes, Dave Karpf rightly observes. It reeks of a kind of radical disregard for truth, for feelings, for other people. And maybe this makes those childhood pranks and jokes feel a lot less benign. But kids aren't the problem here.

More bad news:

- "Campus, an online two-year college backed by Sam Altman and Shaquille O’Neal, has acquired AI learning platform Sizzle AI for an undisclosed amount," Inside Higher Ed reports.

- For a while, the narrative was "schools don't do a good job teaching students how to code." Now these same folks are mad schools are teaching students how to code and discouraging the usage of "AI" tools that promise to do it for them.

- "A hack impacting Discord’s age verification process shows in stark terms the risk of tech companies collecting users’ ID documents. Now the hackers are posting peoples’ IDs and other sensitive information online," says 404 Media.

- "Trump Administration Guts Education Department with More Layoffs," The New York Times reports, noting the 95% reduction in staff working in the Office of Special Education Programs since the beginning of the year.

- "Here's how TikTok keeps you swiping" – an interactive piece from The Washington Post that dovetails nicely with what I wrote about in Wednesday's essay on "addiction."

- Viola Zhou on how "AI is reshaping childhood in China."

- From Fortune: "Perplexity’s 31-year-old CEO horrified after seeing a student using his free AI browser to cheat: ‘Absolutely don’t do this’." Spoiler alert: he's not horrified. Students' questionable practices are what's propping up the growth charts for this immoral industry.

Thanks for reading Second Breakfast. Please consider becoming a paid subscriber, as this is my full-time job – although fair warning: I am taking November and December off from sending emails on a regular basis. (I'm sure I'll still write and send something.) I've got a bunch of talks I'm giving and a book proposal to write. (Hi Susan.)

This week's bird is the plate-billed mountain toucan, which lives in the high-mountain forests of the Andes. Toucans are arguably one of the world's most recognizable birds, due to their large and colorful bills. As such, they've been frequently used in advertising – notably (and related to today's stories) in iconic ads for some fairly middling beer and children's cereal. The plate-billed mountain toucan is considered "near threatened" as its habitat is being lost to deforestation. Good thing "AI" is going to fix climate change for us.