Companion Specious

If you've ridden the NYC subway lately, you've seen the ads for Friend. "Largest subway campaign ever," the founder proudly posted on Instagram.

Friend is (of course) an "AI" startup, another one of these "'AI' companion" services, another one of these new "AI" listening devices you're supposed to carry with you all the time, everywhere. Like all these new "AI" gadgets, the reviews have been universally terrible: "invasive," "manipulative," "condescending," "useless." "I hate my AI friend," Wired's headline read.

So perhaps the 22-year-old founder of Friend thought that dropping over $1 million on advertising would counter this – go direct to the people to make your pitch because surely everyone wants to wear a chatbot as a necklace, right? Surely everyone wants an "AI" friend?

Actually, no. No they don't. Or New Yorkers don't, at least. The ads – which are everywhere – are now everywhere defaced. The largest subway campaign ever has prompted an overwhelming rejection of not just Friend's messaging but the whole "AI" industry's. "AI is not your friend." "This is surveillance." "AI couldn't care if you lived or died."

Much like Trump, "AI" remains wildly unpopular and as such desperate to showcase how much power the technology can nonetheless wield. Most people see through this; most people recognize, as Hagen Blix says in an interview with Brian Merchant, that this is "class warfare through enshittification."

Ta-Nehisi Coates's appearance on The Ezra Klein Show this week was incredibly revealing, and not just about the extent to which Ezra Klein specifically seems willing to throw women and transgender people under the bus in order to gain (re-gain, retain) political power. I wonder if people who share his mindset (or his politics or, more accurately perhaps, his class affinity) – this whole "abundance" agenda – are able to rationalize the dehumanization of other people and, for my purposes here, to rationalize it through an embrace of "AI." Perhaps that's because they have already accepted the frameworks, the ideologies, the beliefs and practices within which fascism and racism (and yeah, capitalism) operate. And perhaps it's because they are freaked-the-fuck-out about uncertainty and believe that the oracle-answer-machine can provide them with predictability, stability, truth.

Tressie McMillan Cottom's NYT op-ed felt like a response, at least in part, to Klein and Coates:

Debate is a luxury of norms and institutional safety, two things that Trump’s second administration has systematically destroyed. So many Americans love the idea that debate solves tough political problems because we love the idea of American exceptionalism. Forget the bloody wars of independence, secession and expansion and remember the epistolaries. We flatter ourselves. There was never a time when rank-and-file Americans perfected the ideas of the Republic without violence or oppression. Debates among founding fathers were games among similarly classed white male property owners. Women, enslaved people, Indigenous nations, disabled people, poor people, some immigrants — they were all excluded from the civic sphere we valorize now as the height of American civility.

If our obsession with debate was merely a romantic delusion, it would be one thing to misapply it to our current political reality. But it is worse than that. An obscene amount of money has turned debate into a weapon, deliberately honed to punish good-faith participation by making us feel like fools for assuming the best of an ideological opponent who only wants to win. Since the days when the conservative scion William F. Buckley Jr. started planning for a conservative resurgence, activist organizations have spent millions of dollars to teach conservative foot soldiers the art of coercive “debate.” Over decades, the right has methodically built institutional safe spaces for conservative thought, poured millions into training young conservatives, legitimized first talk radio and then conservative TV news and then the alt-right blogosphere. This machine built, in some respects, Charlie Kirk, and then he built others like him.

The weaponization of debate is so well known that it is a meme: a guy with a table and a sign that says “Change my mind.” On the day he was killed, Kirk was sitting in a tent with a similar label, “Prove me wrong.” Sounds harmless enough in theory, but performative debate metastasizes. Whether in the form of abortion opponents who show up to “debate” women about why bodily autonomy is a sin or scientific racists who show up to “debate” Black and Indigenous thinkers about the rational arguments of their human depravity, the aim isn’t debate but debasement. You can be forgiven for not knowing this.

Professional intellectuals and political observers cannot be forgiven for pretending not to know this. It is not hidden history.

Cottom's article underscores how hard authoritarian forces – political forces and industry forces – are working to control information. The acquisition of Twitter by Elon Musk. The acquisition of TikTok by Larry Ellison. The control of Paramount by Ellison's son. Again, we should view "AI" – at its core, an algorithmic control of data gathering and distribution – as part of these efforts. "The age of sponsored knowing," as Jeppe Klitgaard Stricker put it.

On the latest episode of his podcast Education Technology Society, Neil Selwyn talks to Brad Robinson about his research on teachers' use of "AI" to generate emails to parents.

MagicSchool, the "AI" platform that Robinson and co-author Kevin Leander examined, offers over 80 different "AI-powered" features designed to automate all sorts of tasks – creating lesson plans, generating assignments, providing feedback, and so on. The company explicitly positions itself as a solution to teacher burnout, Robinson observes – and here we see (as usual) burnout framed as a technology problem, with the responsibility for addressing it shifted onto individual teachers rather than, say, onto schools or society undertaking structural change.

Using MagicSchool, the website tells educators, gives "you more time where it matters most – with your students." And one might ask, considering the large number of tasks that the company says it can automate, "what's left?" What exactly does it think teachers will do with all that extra time? (At what point is the teacher replaced by an algorithm altogether?) But just as importantly, as Robinson and Leander found in their research, the outsourcing of these so-called bureaucratic tasks to automation might diminish relationships. Those who push for the automation of work – "AI" builders, promoters, and importantly, school administrators – do not recognize this because they do not understand (or value) what constitutes care work. The tasks that "AI" says it can do are actually important work, not "bullshit jobs" – affective labor to be sure, but also pedagogical work; and these tasks – carefully crafting messages to send home, for example – shape how teachers develop a learning community in and beyond the classroom walls.

Do teachers use these tools because they really believe "AI" can foster authentic, meaningful relationships with families? Or is it because they're so swamped that communication gets pushed down the To Do list and MagicSchool promises "magic"? Robinson asks.

Teachers, he argues, are being forced to make difficult decisions and adopt "AI" because of their working conditions. This should be a union issue. But of course the teachers' unions in the US have seemingly aligned themselves with the "AI" industry.

Such a very different response from SAG-AFTRA this week to an "AI"-generated "actor," Tilly Norwood.

“To be clear, ‘Tilly Norwood’ is not an actor, it’s a character generated by a computer program that was trained on the work of countless professional performers — without permission or compensation,” the union said in a statement. “It has no life experience to draw from, no emotion and, from what we’ve seen, audiences aren’t interested in watching computer-generated content untethered from the human experience. It doesn’t solve any ‘problem’ — it creates the problem of using stolen performances to put actors out of work, jeopardizing performer livelihoods and devaluing human artistry.”

Meanwhile, in education circles, we get crap like this: "Goblins AI Math Tutoring App Clones Your Teacher’s Looks and Voice."

- "AI won't save higher education. It will further divide it," write Richard Watermeyer, Donna Lanclos, and Lawrie Phipps.

- "Should college get harder?" asks Joshua Rothman.

- "School of Grok" by Ian Krietzberg

- "Sometimes We Resist AI for Good Reasons" by Kevin Gannon

I would be remiss if I didn't make note of the Chapter 11 news: Anthology, the parent company of Blackboard, has declared bankruptcy.

It is easier to imagine the end of the world than the end of the learning management system

Phil Hill has a look at the Chapter 11 filing "by the numbers," and while I am more than happy to leave the analysis of the LMS industry in his capable hands, I will add a reading recommendation: Megan Greenwell's Bad Company: Private Equity and the Death of the American Dream. Her book does not look at any education or ed-tech companies acquired by private equity – Blackboard, PowerSchool, Instructure, for starters – but it does showcase how the industry operates. (Spoiler alert: shady AF.) It isn't simply that companies acquired by PE are more likely to go bankrupt (putting people out of work, putting public pensions at risk). It's that private equity firms profit no matter what: the companies they take over make money, private equity makes money; the companies they take over lose money, private equity still makes money.

I was reminded this week – hahahahahahaha, as if I'm not reminded every week by this incessant cry to surrender our humanity to "AI" – that a lot of folks in and around ed-tech are simply not good people. This wasn't prompted by the latest MrBeast stunt, although hey, he seems like such an utter jerk. (Reminder: he's apparently an advisor to Alpha School.)

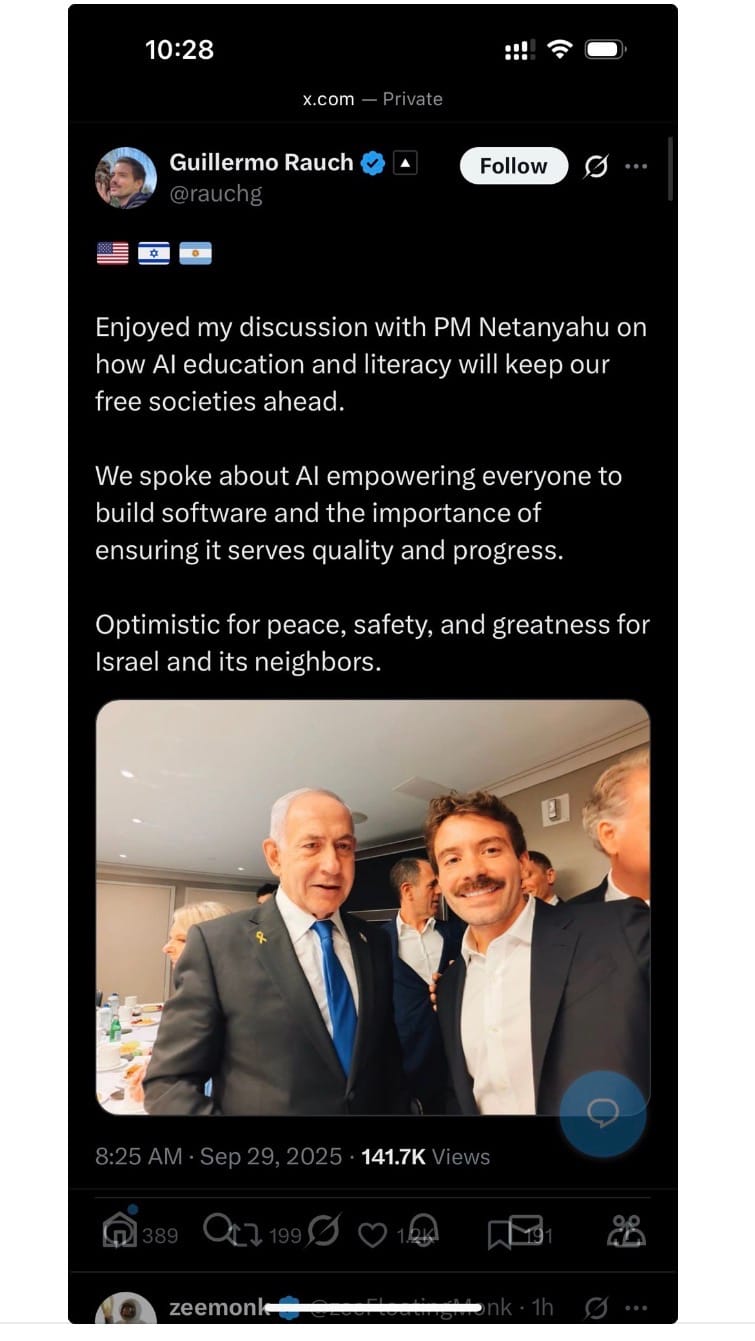

It was this selfie from Guillermo Rauch, now the CEO of the AI company Vercel and once upon a time the CTO of an ed-tech startup called Learnboost:

Never say anything nice about an ed-tech company. At best, you will be very disappointed. At worst, you might find yourself applauding genocide.

Today's bird is the malachite kingfisher, one of the hundreds of species of birds that can be found in Gombe National Park in Tanzania – a site most famous for its great ape, not its avian population. The park was where Jane Goodall conducted her research on chimpanzees in the 1960s. May her legacy be peace and compassion and justice for all living creatures.

Thanks for reading Second Breakfast. Please consider becoming a paid subscriber. Your financial support enables me to do this work.