Do Not Obey in Advance

Happy New Year! Let's all take a deep breath, because 2025 is going to be a weird one.

"Do not obey in advance," historian Timothy Snyder cautions. "Most of the power of authoritarianism is freely given. In times like these, individuals think ahead about what a more repressive government will want, and then offer themselves without being asked. A citizen who acts this way is teaching power what it can do." Snyder published these words in his bestselling book On Tyranny back in 2017, on the cusp of the new Trump administration. And here we are again, heading into another new year, another four years of Trumpist horror.

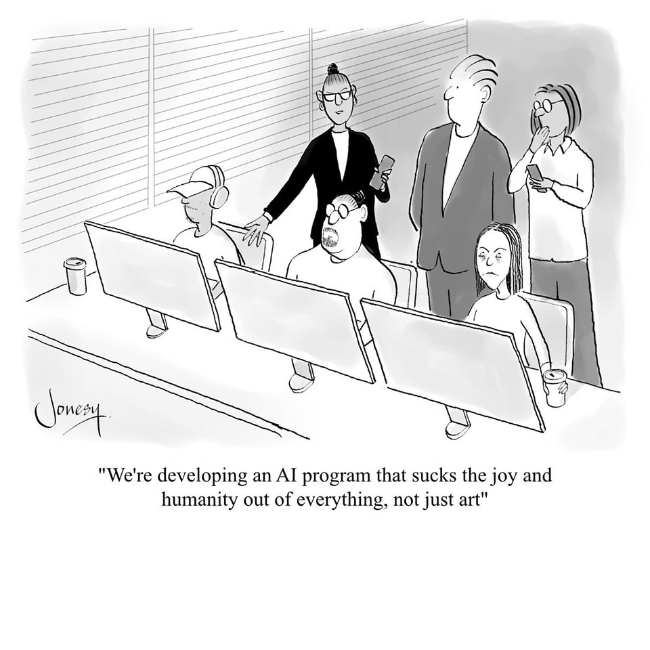

You can already see the ways in which people are obeying – many in the pundit class quite overtly, with their calls to “make peace” with MAGA. But there’s always that more subtle obsequiousness, I think, that we need to be on guard for, and I do wonder if the embrace of artificial intelligence isn’t also, in its own way, a surrender to authoritarianism.

Or, if it makes you feel better, maybe it’s not a surrender; maybe it’s just how some people are trying to cope.

The popular narrative that explains the recent explosion in AI points to technological breakthroughs, and certainly that's one way to tell the story – more GPUs, more data, fine-tuned algorithms, and so on. But you could also argue that there have been other massive shifts – cultural, sociological, and economic shifts – that have made people "offer themselves without being asked," to borrow Snyder's phrasing. These include the proliferation of misinformation, ever-growing economic inequality, and the threats from ongoing environmental degradation (including pandemics). AI doesn’t fix any of these; indeed, it makes things worse, more unknowable (and sometimes, I dare say, all that is the explicit goal – making things worse – particularly for tyrants, for tech titans, for those who care nothing for community, who’d like to have robots replace a disruptive and expensive workforce).

As life has become more and more overwhelming, people are encouraged to embrace AI as a potential arbiter of "truth." (It's so clearly not – generative AI generates bullshit – but that hardly matters when folks are so fearful, when everything feels so precarious.) In the face of great uncertainty, there is comfort, perhaps, in the Boolean. Furthermore, with its algorithmic decision-making, AI enables an evasion of accountability and responsibility; it promises plausible deniability – for individuals and institutions, practically and psychologically – and as such, it seems perfect for surviving, if not thriving, in a world of austerity and tyranny.

Trumpism, that is to say, might just explain the rise of AI as well as any technological advance — the dream of unconstrained command and control.

Technology is not the sum of the artifacts, of the wheels and gears, of the rails and electronic transmitters. Technology is a system. It entails far more than its individual material components. Technology involves organization, procedures, symbols, new words, equations, and, most of all, a mindset. – Ursula Franklin, The Real World of Technology

Shortly after Trump's inauguration in 2017, I gave a talk about what I thought folks in education (and ed-tech in particular) should be wary of regarding the incoming President and his goals for education – namely, I cautioned about the continued push for massive data collection about students. While often framed as attempts to identify "students at risk" – ah, how we love to frame surveillance as care – under an overtly racist, nationalist, and transphobic administration, this actually means identifying students as risks. And already we can see how seemingly mundane data collection – student enrollment and financial aid information, for example – can be weaponized for deportation sweeps and so on. It's never really about protecting students. It’s about command and control.

Although much of the hubbub about AI involves the latest chatbot gimmickry, we should remember that schools have already adopted all sorts of automated systems – predictive algorithms, learning analytics, test proctoring – in their administrative and pedagogical software-services. The ideological underpinnings of AI – centralization of power, resource extraction, austerity, surveillance – are already deeply intertwined with many of our educational practices.

That's depressing, I realize. Changing these systems will be incredibly difficult. The road ahead is very, very daunting.

If it’s any consolation, I think we can point to this latest round of AI hype and say with some confidence that much of it is little more than another round of snake oil. Indeed, many companies are simply slapping the words "artificial intelligence" onto their existing offerings. Most obviously, what used to be sold as "personalized learning" is now suddenly AI. Encyclopedia Britannica is now positioning itself as an AI company, for crying out loud; and while I don't think it plans to go back to door-to-door sales of teaching machines, it says it plans to sell teaching-machine software – excuse me, "AI" – to schools. "Arizona’s getting an online charter school taught entirely by AI," TechCrunch reported last month. But it turns out – no surprise – that the "interactive, AI-powered platforms that continuously adjust to [students'] individual learning pace and style" are really just Khan Academy and IXL. The big “innovation” here isn't AI; it's that the school has no teachers. And let's be honest: that's not a marker of some technological breakthrough; it's a political decision, one based on an abhorrence of public education and teachers' unions.

"Do not obey in advance." And yet that's precisely what much of ed-tech, for so long now, has demanded we do. (Not all ed-tech, of course. Not Sesame Street, for example. But I guess we're gonna give up on that progressive vision of teaching and learning with technology. And arguably, it seems we already did.)

One of the drawbacks to taking a break from newslettering over the holidays is that the news doesn't stop. Indeed, some folks love to issue those last-minute press releases, not because everyone in marketing wants to cross that one last thing off their to-do list before heading out the door, but because they hope that, by mid-December, no one is really paying attention anymore. Companies can announce that their AI has solved some tricky math puzzles – "solved abstract reasoning," even – and hint that it's reached AGI; journalists can observe that it appears as though what counts as AGI has been revised around financial rather than cognitive capabilities; companies can say they’re bringing the “dead internet theory” to fruition, that they plan to flood their social media platform with bots; journalists can discover the dangerous implications of AI for the electricity grid; they can uncover how companies are promoting AI-generated content in their algorithms so as to avoid paying royalties to artists. But then – fingers crossed!! – when everyone returns from the holidays, our collective memories will have been purged of the perils, promises, and predictions made in the waning weeks of the old year.

The Internet isn’t breaking. Beneath the Wikipedias and Facebooks and YouTubes and other shiny repositories of information, community, and culture—the Internet is, and always has been, mostly garbage. – Sarah Jeong, The Internet of Garbage

A few end-of-the-year links before you forget: Ben Riley interviews Bror Saxberg on "the role of knowledge in the age of AI." Josh Brake says "we're all lab rats now." "Beware of metacognitive laziness: Effects of generative artificial intelligence on learning motivation, processes, and performance" – new research from Yizhou Fan, Luzhen Tang, Huixiao Le, Kejie Shen, Shufang Tan, Yueying Zhao, Yuan Shen, Xinyu Li, and Dragan Gašević. Laura Hartenberger on "what AI teaches us about good writing." A reminder from Dan Cohen on what AI corpora are missing: books. He has "a Mellon-funded project to develop an ethical, public-interest way to incorporate books into artificial intelligence." "AI agents will be manipulation engines," Kate Crawford cautions. "Never forgive them," Ed Zitron says (not succinctly, but – bless his heart – very passionately). L. M. Sacasas on automated decision-making (or rather the delegation of decision-making to machines). He cites Lewis Mumford:

...We must return to the human center. We must challenge this authoritarian system that has given to an under-dimensioned ideology and technology the authority that belongs to the human personality. I repeat: life cannot be delegated. – Lewis Mumford, "Authoritarian and Democratic Technics"

Thanks for subscribing to Second Breakfast. Paid subscribers will receive an essay on Monday on Rip Van Winkle and ed-tech amnesia — if I can pull off the analogy.