Luddites Win

Happy Friday! What's good?

I've picked up many, many new subscribers to Second Breakfast this week. Welcome. And thank you all for making me feel like my decision to re-enter the ed-tech fray and write another book is a good one. I started Second Breakfast because I very much didn't want to do either of those things, but thanks to time, therapy, and some real dangerous and dumbass stuff I've read lately from various ed-tech luminaries, I feel like I should. I feel like I can.

I started this newsletter to explore my thoughts on my own body and grief, and I quickly recognized how some of the same trends I'd chronicled in education technology – personalization, quantification, efficiency, optimization, for example – were apparent in health technologies as well. Indeed, Silicon Valley would like to engineer and automate all aspects of our lives – not just school and work, but leisure as well. Everything we do is now organized and tracked by some piece of the "productivity suite" – the tool, the ideology. We send calendar invites for dinner dates. We make Google Docs for grocery lists. We create spreadsheets to track the books we've read, the miles we've run. We use bullet journals. It's all supposed to be some sort of "life hack" – except nothing is getting easier or better; we're not happier. Everything's just getting more hackneyed, more hurried, more chopped up and garbled and shredded. We can feel it. Every day everything is more and more fragile, more and more precarious.

I mean, no wonder some people are trying to sell us AI in response: we spend our time clicking on terrible software, generating useless data and pointless updates. Humans crave meaning, but instead of recognizing that productivity software has forced us to spend our days churning out a lot of meaningless shlock – instead of putting an end to a system full of "bullshit jobs" – folks now want to sell us AI to scour for some meaningful patterns amongst all the bullshit. We're creating more bullshit work, more bullshit tasks, more bullshit jobs.

Anyway.

As I once did on Hack Education, every Friday, I round up the latest week's news. This newsletter is now going to be more stories about AI – for which I do apologize profusely, because it stinks. Nonetheless this task of rounding up the week's news helps me start to identify the dominant cultural narratives surrounding our future. And – sound the humanities klaxon – I do think what we're experiencing now is just as important to consider as a social/political/cultural phenomenon as it is a technological or scientific one. Maybe even more so.

I've tried very hard to avoid almost all news about ed-tech for the last few years. And to be honest, I plan to continue to do so. (Surprise surprise: my book isn't going to be about the "Top Five Ways Teachers Can Use AI in the Classroom" or the "Eight Reasons Why the Latest Version of ChatGPT Will Write Better Chemistry Textbooks" or "Six Bad Things about AI and the One Nice Thing My Editor Made Me Include for Balance.")

I want to write a book that won't be dismissed as out-of-date the moment it gets published because everything is changing so very very fast. I mean, so much has changed since I stopped writing Hack Education in 2022. Oh. Wait. Hahahahahahaha. Hahahahahahaha. Gasps for air. Hahahahahaha. Honestly, my book could come out in 2032 and I bet Sal Khan and Bill Gates will still be out there hustlin', acting like AI is brand new, never before used in classrooms, doing things teachers cannot possibly do, like – and I kid you not, this is an example from Khan's new book – come up with assignments that "allow students to give free responses when discussing a text." I don't plan to pay much attention to the ed-tech press releases because, like, why would anyone?! Please don't pitch me on your startup – even if you've decided to add "AI" to the little blurb about what you're building. I will, as my friend Dan has warned, destroy you.

So I'm looking to piece together some big-picture narratives about work, school, knowledge, learning, and the epistemological devastation that Silicon Valley seeks to unleash. This sort of thing...

Robots and labor: "Yes, the striking dockworkers were Luddites. And they won," says Brian Merchant. "Do U.S. Ports Need More Automation?" asks Brian Potter. "‘I Applied to 2,843 Roles’ With an AI-Powered Job Application Bot," writes Jason Koebler in 404 Media. (This reminds me of the AI ouroboros of plagiarism-detection software-makers who also sell students algorithmically-generated essays.)

Knowledge machines: "The Editors Protecting Wikipedia from AI Hoaxes" via 404 Media. "The more sophisticated AI models get, the more likely they are to lie," says Ars Technica. Via The Washington Post: "Who uses public libraries the most? There’s a divide by religion, and politics." Also from The Washington Post: "No time to read? Google’s new AI will turn anything into a podcast." (FWIW, here are my thoughts on Google's NotebookLM and that article in The Atlantic contending that college students don't read.) "Bullshit context and the Meta AI" by Mike Caulfield (who also wrote this week that "AI in Search Is Really the Race for the Overlay").

Go read those last two pieces, and then come back (and finish this very long email). I'll wait...

Mike argues that the goal of AI in search should be to give you the right information at the right time. That's the "overlay," he says (which he links back to Vannevar Bush and the Memex. Know your history). But I'm not sure that what we (I use that plural first person very very loosely here) care about right now is "the right information." In Monday's Garbage Day newsletter, Ryan Broderick argued that there's a growing number of sectors of society that really aren't fazed if something they post is incorrect as long as it feels true. Indeed, this has been a fundamental piece of the right wing's response to fact-checking for almost a decade now.

Now, thanks to generative AI, this willingness to embrace as "truth" something that is utterly severed from "fact" has become a real selling point – and we are on the brink of a new epistemology with pretty terrible implications not just for knowledge but for society. "You cannot be a useful civic resource and also give your users a near-unlimited ability to generate things that are not real," Broderick writes. "And I don’t think Meta are stupid enough to not know this. But like their own users, they have decided that it doesn’t matter what’s real, only what feels real enough to share." (emphasis mine.)

More AI history: "Is a Chat with a Bot a Conversation?" asks Jill Lepore.

The science of the mind: "How to think critically about the quest for Artificial General Intelligence" by Benjamin Riley. "An adult fruit fly brain has been mapped — human brains could follow," says The Economist. "Scientists say they've traced back the voices heard by people with schizophrenia," Futurism reports. My PTSD treatment is going very well, thanks for asking, but I’m not sure how much I trust anything that psychology says it’s discovered about the mind. And I’m not alone: psychologist Adam Mastroianni on some of the problems with the discipline – or, "the mystery of the televised salad."

This could still be a fitness technology newsletter: The New York Times on "The Robotic Future of Pro Sports." "The Problem with Tracking Sleep Data" – Alex Hutchinson argues that even sleep researchers don't really know what to make of all this data. Not that that’s going to stop any company from gathering it, yo.

Death drive, part 1: "Human Longevity May Have Reached its Upper Limit," says Scientific American. So, you mean the latest craze(s) promoted by the aging Silicon Valley elite might have no foundation in science!? I guess we're just going to have to upload our minds into machines if we want to live forever — something totally scientifically plausible. Side eye.

Death drive, part 2: "Former Google CEO Eric Schmidt says we should go all in on building AI data centers because 'we are never going to meet our climate goals anyway'." Man, fuck that guy.

Elsewhere in tech culture: "Tech has never looked more macho," Caitlin Dewey contends. "Roblox Is Somehow Even Worse Than We Thought, And We Already Thought It Was Pretty Fuckin’ Bad." Esquire on AI girlfriends. "Hacked ‘AI Girlfriend’ Data Shows Prompts Describing Child Sexual Abuse" via 404 Media.

OpenAI, downgraded: "It’s Time to Stop Taking Sam Altman at His Word," says David Karpf. Enough of talking about what AI might do someday; let’s talk about what it’s doing now. Elsewhere in OpenAI news, it’s good news / bad news, both from Wired. "The OpenAI Talent Exodus Gives Rivals an Opening." But "The Race to Block OpenAI's Scraping Bots is Slowing Down."

AI meme-hustling: From the Stack Overflow blog: "Meet the AI-native developers who build software through prompt engineering." "AI-native" – LOL. Judy Estrin makes "The Case Against AI Everything, Everywhere, All at Once." (When you've lost Time Magazine...)

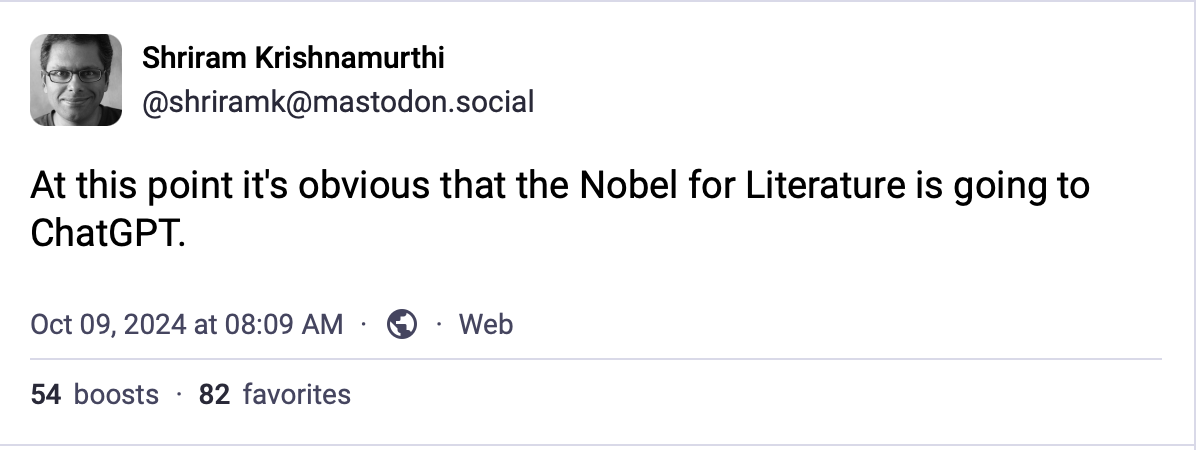

Random factoid: I went to high school with a lot of folks from Scandinavia, and "jævla svensker" is the only phrase I know how to say in Norwegian. Meanwhile, in Sweden: "Nobel Prize In Physics Awarded To Alien Giving Peace Sign Driving Tie-Dye VW Bug." Oops, that was The Onion. Apparently, "Nobel Physics Prize Awarded for Pioneering A.I. Research by 2 Scientists" via The New York Times.

(Funnily enough, "jävla danskar" is the only thing I can say in Swedish.)

Thanks for subscribing to Second Breakfast. Paid subscribers will hear from me on Monday too, where I'll write some more about what I'm reading/researching for the book, as well as random thoughts about the world-at-large (I saw Hannah Gadsby's show on Wednesday, and they talked about grief and AI. So I'll have something to say about that, perhaps). And I'll continue to keep you posted, I promise, on how my running, weightlifting, ballet-dancing, and eating are all going.