More Workslop for Mother

Brian Merchant's assertion that "The Luddite Renaissance is in full swing," Jacobin's claim that "The AI Revolution Might Be Running Out of Steam" – these feel a bit too optimistic perhaps, particularly if you're one of many educators who've been compelled these past few weeks/months to sit in back-to-school training sessions in which administrators crow about whatever "AI" product they purchased last spring: how it's poised to allow you to "do more" [unspoken: with less]. "AI" as counseling. "AI" as advising. "AI" as tutoring. "AI" as grading. "AI" as curriculum development. "AI" as reporter. "AI" as researcher. "AI" in the LMS. "AI" in test proctoring. "AI" everywhere, whether you like it or not.

"AI will save you so much time," management insists, with this as with every new piece of hardware and software they force workers to use, never ever admitting their own complicity in why everyone is so overworked in the first place. Instead – and we all know this in our guts – they're going to leverage "AI" to threaten and to eliminate jobs, to refuse to hire replacements, to diminish everyone's creativity and autonomy, to lower everyone's standard of living except – oh, interesting – their own. (Echoes of Marc Andreessen here, who's certain that "AI" could never replace venture capitalists.)

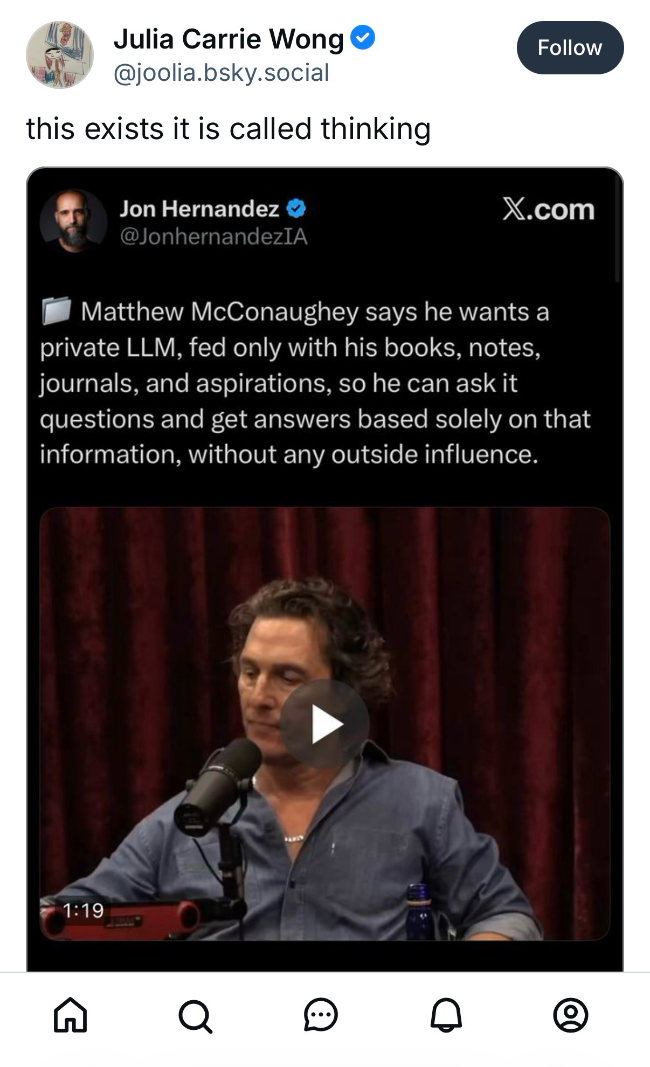

But maybe "AI" is finally finally finally running out of steam. Maybe as n+1 writes (in a little nod to Thomas Pynchon's 1984 essay so it has been a long time coming), "It's okay to be a Luddite!"

(It is! It is!)

The tenor of a lot of reporting about "AI" has, no doubt, shifted. It shifted with the flop of ChatGPT 5. It shifted with the NYT story on Adam Raine's suicide. It shifted with the MIT study that found 95% of AI pilots fail. Oh sure, there are still those who try to keep cheerleading – The Wall Street Journal, for example, says "Stop Worrying About AI’s Return on Investment." And there are those who signed multi-million-dollar deals with OpenAI and Anthropic and Google earlier this year who really don't want to look like they were duped (not to mention those who've staked new careers and new identities on some glorious "AI" future, who probably don't want to look like they were part of a con).

But the emperor, as that little boy in Hans Christian Andersen's story pointed out, wears no clothes.

"AI ‘Workslop’ Is Killing Productivity and Making Workers Miserable," 404 Media reported this week, pointing to a handful of the recent articles – journalistic, academic, and otherwise – that reaffirm what many of us already knew: this stuff sucks, and those who keep asserting that it's amazing are probably not the people who have to go back and clean up all the mistakes and finesse all the banal verbosity that's been "generated" by a chatbot.

"Workslop" is a great little neologism, and it feels like it can easily be applied to education – not just to the "AI"-generated essays, but to the "AI"-generated textbooks and tests and curriculum and handouts. The researchers/consultants who coined the term define "workslop" as "AI generated work content that masquerades as good work, but lacks the substance to meaningfully advance a given task." The assignment is complete; and yet nothing has been done, nothing has been taught, nothing has been learned. Workslop "shifts the burden of the work downstream," they write, "requiring the receiver to interpret, correct, or redo the work. In other words, it transfers the effort from creator to receiver."

More Work for Mother – first published forty years ago – remains as relevant as ever, particularly as "AI" colonizes work and leisure, the job and the home. I think I saw Tressie McMillan Cottom post something on social media a while ago, something like "men use AI to do less work, and women use AI to do more." Which tracks. And it tracks in education too where the "downstream" the authors above point to involves the kind of care work, the kind of group work, the kind of emotional and relational work, that has never been valued but that is absolutely necessary for the generous reading and listening that teachers and students must do together.

The San Francisco Standard's Ezra Wallach reported on the opening of an Alpha School in the city. Sigh, you know: Mackenzie Price's "2 hour learning" private school hustle: "It’s the city’s new most expensive private school — and AI is the teacher." I'm quoted calling the whole thing "snake oil," which makes me extraordinarily happy. Sorry not sorry.

I told Wallach that this push for "personalized learning" – everyone's just rebranded this as "AI" now – is no damn good as it disrupts this relational, reciprocal aspect to learning. When we isolate everyone on a screen, via an algorithm, and pretend the primary values in education are efficiency, optimization, and "individualization," then we lose all sense of community, all sense of responsibility to one another. And that is how we learn – in relationships with people, their words, their ideas, their embodied selves.

"AI" is damaging and dangerous because it is profoundly anti-democratic – this concerted effort to undermine public education is just one part of it. The "AI" industry is firmly committed to centralizing control of information – control of creativity, decision-making, work, health, prediction, policing, teaching, learning (that is, ostensibly, everything). And centralizing control in the hands of a bunch of villains, monsters, dickheads, dumbasses to boot – one of whom is openly toying with the idea of being the Antichrist.

And yeah, these fellows have plans for schools (although, if it's at all reassuring, they've been working on these plans since at least 1970 and have never gotten very far because they're losers and nobody likes them). A few highlights:

- "Could the Supreme Court outlaw public schools?" Jennifer Berkshire asks historian Johann Neem.

- Oklahoma's State Superintendent Ryan Walters says all public schools in the state will have Turning Point USA chapters in order to counter "radical leftist teachers unions" and their "woke indoctrination." (Update: he just quit, so he can devote himself full-time to hassling teachers.)

- Speaking of Turning Point USA, Mike Masnick on "The 'Debate Me Bro' Grift: How Trolls Weaponized The Marketplace Of Ideas."

- Silicon Valley used to tout charter schools – for "other people's children," natch. Now they're into "microschools," private schools for the kids of their employees. Do these schools work? (Whatever that means.) "Researchers find it nearly impossible to gauge microschools impact," The 74 reports, because researchers don't know what or how to measure. One of the main reasons parents are sending their kids to these schools is to avoid all that standardized testing of the public school system. And I guess educational researchers don't know how to assess without assessments.

- "At This Rural Microschool, Students Will Study With AI and Run an Airbnb," says EdSurge. A lot of these microschools really lean into the whole cult of "entrepreneurship." The one featured here will run a business – property management, apparently – and "for students, the work they will do is unpaid," which is definitely giving kids a solid lesson in what it's like to work at a venture-backed startup: how to make someone else rich.

- Michael Horn's still at it, I see, threatening that "Start-Up Culture Comes to K–12 Accreditation."

- "Another AI Side Effect: Erosion of Student-Teacher Trust," says Greg Toppo. Or maybe that the classroom has become "more transactional" is related less to "AI" and more to a much longer litany of dumb ideas and gadgets that investors and ed-tech hype-men keep proposing. "Disruptive innovation" ad nauseam.

It's never too late to say "I'm sorry," to admit that you made a mistake. It's never too late to quit. Hell, look at what Steven Levy – Steven friggin' Levy – wrote this week: "I Thought I Knew Silicon Valley. I Was Wrong."

As we train our sights on what we oppose, let’s recall the costs of surrender. When we use generative AI, we consent to the appropriation of our intellectual property by data scrapers. We stuff the pockets of oligarchs with even more money. We abet the acceleration of a social media gyre that everyone admits is making life worse. We accept the further degradation of an already degraded educational system. We agree that we would rather deplete our natural resources than make our own art or think our own thoughts. We dig ourselves deeper into crises that have been made worse by technology, from the erosion of electoral democracy to the intensification of climate change. We condone platforms that not only urge children to commit suicide, they instruct them on how to tie the noose. We hand over our autonomy, at the very moment of emerging American fascism.

-- "Large Language Muddle," the editors of n+1

Thanks for subscribing to Second Breakfast. Please consider becoming a paid subscriber. Your support enables me to do this work.

Today's bird is the California condor, which became extinct in the wild in the 1980s but has been successfully bred in captivity and reintroduced into California, Utah, and Arizona. (It remains critically endangered.) And while puppet-rearing has been used to raise newborn birds – a simulation of an adult bird that feeds the young so that it doesn't imprint on a human – the condors do say that they'd rather raise their own children, thank you very much, so please stop poisoning the planet. Also "AI" – puppet-rearing of a slightly different sort – is so ludicrous can you even hear yourself with this bullshit, driving yourself to extinction?!