The Broken Record

I was amused to read Dan Meyer’s account of the recent AI+Education Summit at Stanford, particularly the remarks made by the university’s former president, John Hennessy, who asked the audience if anyone remembered “the MOOC revolution” and could explain how, this time, things will be different. The panelists all seemed to assert that -- thanks to “AI” -- the revolution is definitely here. The revolution or, say, a tsunami -- the word that Hennessy used back in 2012 when he himself predicted a sweeping technological transformation of education -- a phrase echoed in so many stupid NYT op-eds and pitch decks. Dan recalled the utterance, but no one else seemed to -- at least no one on stage or in the audience seemed to have the guts to turn to Hennessy (or any of the attendees or speakers, many of whom were also high on the MOOC vapors) and call him on his predictive bullshit.

As Dan correctly notes,

Look — this is more or less how the same crowd talked about MOOCs ten years ago. Copy and paste. And AI tutors will fall short of the same bar for the same reason MOOCs did: it’s humans who help humans do hard things. Ever thus. And so many of these technologies — by accident or design — fit a bell jar around the student. They put the kid into an airtight container with the technology inside and every other human outside. That’s all you need to know about their odds of success.

The odds of success are non-existent. There will be no “AI” tutor revolution just as there was no MOOC revolution just as there was no personalized learning revolution just as there was no computer-assisted instruction revolution just as there was no teaching machine revolution. If there is a tsunami, it’s not technological as much as ideological, as the values of Silicon Valley -- techno-libertarianism, accelerationism -- are hard at work in undermining democratic institutions, including school.

The history of failed ed-tech startups and ed-tech schools is long, and yet we’re trapped in this awful cycle where investors and entrepreneurs keep repackaging the same bad ideas.

There was another story this week on Alpha School, this one by 404 Media’s Emanuel Maiberg: “‘Students Are Being Treated Like Guinea Pigs:’ Inside an AI-Powered Private School.” Back in October, Wired documented the miserable experiences of students, forced into hours of repetitive clicking on drill-and-kill software under incessant surveillance. Maiberg’s reporting, in part, expands on this, as he writes about the goal of building “bossware for kids” -- that is, ways to identify “enhanced tracking and monitoring of kids beyond screentime data.”

But much of Maiberg’s story examines the use of technologies to build the “AI curriculum” touted by the school’s founders. Not only does Alpha School’s reliance on LLMs for creating curriculum, reading assignments, and exercises mean these materials are littered with garbled nonsense, but the company seems to also be scraping (i.e. stealing) other education companies’ materials, including those of IXL and Khan Academy, for use in building their own.

While I deeply appreciate Maiberg’s reporting here -- I am a huge fan of 404 Media and am a paid subscriber because I think investigative journalism is important and necessary -- this story is a huge disappointment because it does not push back at all on the underlying ideas of Alpha School. Indeed, this is precisely the problem that keeps us trapped in this “ed-tech deja vu” -- the one that has, just in the last couple of decades, recycled this same idea over and over and over again (funded and promoted, it’s worth noting, by the very same people -- the Marc Andreessens and Reid Hoffmans and Mark Zuckerbergs of the world): Rocketship Education. Summit Learning. AltSchool. And now Alpha School.

Maiberg suggests in his story (and more explicitly on the podcast in which he and the publication’s other co-founders discuss the week’s articles) that Alpha School’s idea of “2 hour learning” is a good idea. But I think that claim -- the school’s key marketing claim, to be sure, before, like everyone else, it started to tout the whole “AI” thing -- needs to really be interrogated. Why are speed and efficiency the goal? These are the goals of the tech industry’s commitment to accelerationism, yes. These are the goals for a lot of video games, where you grind through repetitive tasks to accumulate enough points to level up. But why should these be something that schools embrace? Why should these be core values for education? Does learning -- deep, rich, transformative learning -- ever actually happen this way? (And what else are we learning, one might ask, when we adopt technological systems and world views that prioritize these?)

Let me quote math educator Michael Pershan at length here:

I keep coming around to this: the interesting innovation of Alpha School is not their apps or schedule or Timeback but their relationship to core academics. This is a school that believes that the “core” of schooling should be taken care of as quickly and painlessly as possible so that the rest of the day can be opened up to things that actually matter. Most schools don’t do this! We instead tell kids that history is a way of understanding ourselves and others. Math, we say, can be an absolute joy, full of logical surprises. We tell kids that a good story can open up your heart and mind.

Alpha doesn’t. They aim to streamline and focus on the essentials for skill mastery. Maybe they are showing you can learn to comprehend challenging texts without reading books. Maybe a math education composed of examples and (mostly) multiple choice questions is, in reality, all you need to ace the SAT.

If it turns out they’re succeeding at this, it’s because they’re trying.

And maybe, one day, Alpha or someone else will crack the code for good. It then will be possible to get all students to grind through the skills and move on. With all that extra time, schools will find better things for kids to do than academics. And maybe, at some point, we’ll ask, what’s the point of grinding through things we don’t care about? Do we really need to become great at mathematics when machines can do it? How important is it really to learn how to read novels or fiction? Maybe, one day, this is how books disappear from schools for good.

Schools like Alpha School, AltSchool, Summit, and Rocketship are all strikingly dystopian insofar as they compromise, if not reject, any sort of agency for students; they compromise, if not reject, any sort of democratic vision for the classroom. School is simply an exercise in engineering and optimization: command and control and test-prep and feedback loops. There is no space for community or cooperation, no time for play -- there is no openness, no curiosity, no contemplation, no pause. There is no possibility for anything, other than what the algorithm predicts.

(Kids hate this shit, no surprise. They want to be human; they want to be with other humans, even if tech-bros try to build a world that’s forgotten how.)

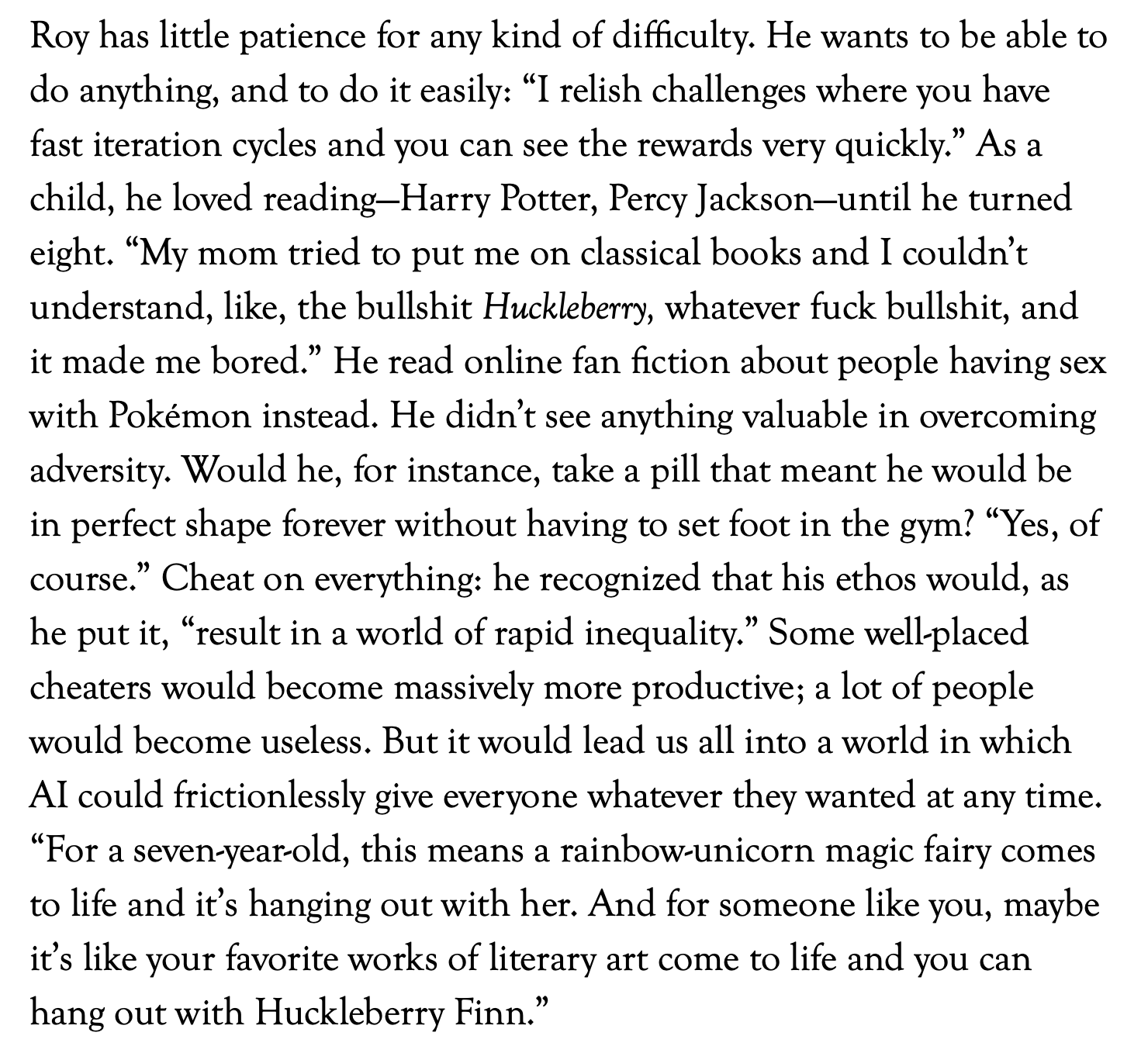

Or rather, most kids hate this shit. There are a few who embrace it because if they play the game right, they reckon, they too can join the tech elite. Case in point, yet another profile of Cluely founder Roy Lee, this one by Sam Kriss in Harper’s: “Child’s Play: Tech’s new generation and the end of thinking.”

I find this insistence from certain quarters that “there is no evidence that social media harms children” to be pretty disingenuous. There’s a lot of evidence -- plenty of research that points to negative effects and, sure, plenty that points to positive effects of technology -- so it’s a little weird to see efforts to curb kids’ mobile phone and social media usage as just some big conspiracy for Jonathan Haidt to sell more books.

Mark Zuckerberg took the stand this week in a California court case that contends that Meta (along with other tech companies such as TikTok and Google) knowingly created software that was addictive, leading to personal injury -- and for the plaintiff in this particular case, leading to anxiety, depression, and poor body image.

That the judge in the case had to chastise Zuckerberg and his legal team for wearing their “AI” Ray-bans in the courtroom just serves to underscore how very little these people care for the norms and values of democratic institutions.

We see this in the courtroom. We see this in the media. We see this in schools -- from Slate: “Meta’s A.I. Smart Glasses Are Wreaking Havoc in Schools Across the Country. It’s Only Going to Get Worse.”

We see this in the billions of dollars that the tech companies plan to funnel into elections this year to try to ensure there are no regulatory measures taken to curb their extractive practices -- $65 million from Meta alone.

What on earth would make you think that tech companies -- their investors, their executives, their sycophants in the media -- want to make education better?

Inside Higher Ed reported this week that the University of Texas Board asked faculty to “avoid ‘controversial’ topics in class.” There weren’t any details on what this meant -- what counts as “controversial” -- or how this might be enforced. (Meanwhile in Florida, college faculty were handed a state-created curriculum and told to teach from it.)

We are witnessing the destruction -- the targeted destruction -- of academic freedom across American universities. This trickles down into all aspects of education at every level.

And to be clear, again, my god, I’m a broken record too: this is all inextricable from the rise of “AI,” from its injection into every piece of educational and managerial software. The tech industry seeks the monopolization of knowledge; they seek the control of labor -- intellectual labor, and all labor, “white collar” and “blue collar,” is intellectual labor. They worship speed and efficiency, not because these values are democratic, but precisely because they believe they can make us bend our entire beings towards their profitability.

Perhaps ed-tech is, in the end, simply “optimistic cruelty”; and these cycles that we keep going through are just repeated and failed attempts to replay and harness Ayn Rand’s bad ideas, her mean-spirited visions for a shiny, shitty technolibertarian future -- one in which children (other people’s children, of course) are the grist for the entrepreneurial mill.

More bad people doing bad things in ed-tech:

- “Ex-NYPD Official Indicted for Accepting Bribes From Tech Exec in Scheme,” reports The City. The company in question here is Saferwatch, which sells panic buttons to schools.

- “Epstein ties to former Lifetouch investor prompt Dearborn Schools to cancel photo day.” School photos and the Epstein circle -- greeeeaaaat.

Today’s bird is the pigeon, because yeah, we are still living in B. F. Skinner’s world -- one where people will look you in the eye and say that being “agentic” means handing over all your decision-making to their system, that “freedom” and “dignity” don’t really matter because their brilliant engineering is going to make everything fine and dandy. This time. Really. It’s a revolution. It’s a tsunami. It’s a shit storm.

“Russian Startup Hacks Pigeon Brains to Turn Them into Living Drones.”

Thanks for reading Second Breakfast. Please consider becoming a paid subscriber. Your financial support is what enables me to do this work.