The Prime Directive

Over a decade ago, at the height of the MOOC madness, many people crowed excitedly about "the end of college" – not because the various technologies they believed would enable this were any good (by "good" I mean either "pedagogically sound" or "at all pleasant to use"), but because they hoped these technologies could crack open new markets to more readily extract public dollars, public knowledge, and public data. (Cory Doctorow's model for "enshittification" does not apply to ed-tech, I should note here, mostly because it is shitty from the get-go.)

Oh sure sure, some of this push for ed-tech in the 2010s was wrapped in the language of expanding access and leveling playing fields, but this was mostly empty rhetoric from a bunch of affluent white men who seemed quite bitter that higher education was no longer their fiefdom (even though it absolutely still was and is), a place where their privilege was readily replicated and secured (even though it absolutely still was and is). Buoyed by the arguments of (and often funding from) Peter Thiel – that college was a "bubble," a bad investment, not worth it – all levels of education have been mercilessly attacked, from within and without, taken over by business interests and particularly business-of-tech interests who seek to manage, innovate, and destroy. Everything and everyone is a machine to optimize and control. Everything and everyone is a market to be exploited. Teachers and students are utterly, utterly expendable – as Columbia University made so very clear this week.

The learning management system, as I have argued for so long, is one of the main control levers for this – the reduction of everything "pedagogical" to a data transaction that can be, as the name suggests, systematized, delegated, assessed, extracted, measured, managed. The university, the school becomes simply a platform, so that "software can eat the world" – or at the very least eat an institution that venture capitalists / techno-fascists blame for rotting the minds of students with progressive ideas about economic justice and civil rights. (Related: "PragerU’s Plan to Red Pill Our Kids")

That Instructure would partner with OpenAI – just one of a series of coups de grâce this week from the forces that have been trying to deal death blows to schools for decades now – should surprise absolutely no one. (What are the "routine and low-value tasks" that are going to be automated, I wonder. And why are educators and students doing these in the first place?) To force "AI" into the LMS means to compel "AI," to force it into every student's and teacher's work – an act that, much like the LMS itself, really benefits no one as much as it benefits the tech industry itself.

As Brian Merchant argued this week, few people are actually clamoring for "AI"; its growing usage is the result of our being "force-fed" this technology: "Big tech’s deployment of AI has been both relentless and methodical.... By inserting AI features onto the top of messaging apps and social media, where it’s all but unignorable, by deploying pop-ups and unsubtle design tricks to direct users to AI on the interface, or by pushing prompts to use AI outright, AI is being imposed on billions of users, rather than eagerly adopted." Generative AI is not, as Josh Brake observes, drawing on the work of Ivan Illich, "convivial" – technology that one is forced to use can never be such. (So surely "anti-conviviality" is a charge we might make about a lot of educational spaces and educational technologies already, although I'm not sure I would agree with Illich that the solution is necessarily one of maximizing personal freedom – that's Peter Thiel's solution already.)

I suppose I have to say something about the Trump Administration's big reveal on Wednesday of its "AI Action Plan," something other than "at what point will the evangelists for 'AI' concede that they're pushing us all towards techno-fascism and war?" (Of course, as Upton Sinclair famously said, "it is difficult to get a man to understand something when his salary depends on his not understanding it." And many men clearly feel powerful, validated, understood even by the sycophancy chit-chat machine.)

Tech Policy Press offers three major takeaways from the announcements: this is about "gutting regulations" (including copyright), challenging states' abilities to restrict "AI," and demanding ideological compliance in "AI" models – all of which will impinge on schools' and universities' functioning (although certainly the last one – the attack on so-called "woke AI" – might be the most obviously troubling). According to The New York Times, companies would not be able to receive federal funding unless their systems are "objective" and "free of ideology."

"It already is," "AI is just math" is already some bullshit I hear from certain quarters in ed-tech and beyond who balk at anyone's opposition to "AI" – which is sorta like saying Zyklon B was "just chemistry." For as Eryk Salvaggio writes, "All AI contains traces of ideology and is in turn steered by ideology" – "AI Could Never Be 'Woke'." "AI" will, however, be weaponized – it is already. If you don't see it, if you don't care, well, I don't know what to tell you.

The soon-to-be defunct (I guess?) Department of Education issued a "dear colleague" letter in conjunction with these AI announcements – and phew, money for AI-related contracts is pretty fucking rich, you gotta admit, considering the government is still withholding some $5 billion in basic funding that schools were supposed to receive July 1.

These grants, according to Secretary McMahon, will be used for "AI-based instructional materials" and "AI-enhanced tutoring" and "AI for jobs" – all of which feels very much like every other announcement of an ed-tech grant program for the past twenty years or so, except someone went through and just replaced "personalized learning" and "learn to code" and "future ready" with "AI" (but then forgot to remove the words "ethical" and "accessible" since I don't think those ideologically-burdensome words are allowed any longer).

That's not my saying the Department of Education's "AI" stuff is so banal that it's fine. It's saying ed-tech's always been a long con.

The Ed-Tech Report Card

"'Someone's always watching': Gen Z is spying on each other" – and this clip from Ocean Vuong underscores the implication for the classroom:

"This ‘violently racist’ hacker claims to be the source of The New York Times’ Mamdani scoop." (As noted in last week's newsletter, this is a much bigger story than just Zohran Mamdani's college application. This hacker has records of the racial background of millions of students.)

New research from Neil Selwyn, Marita Ljungqvist, and Anders Sonesson on teachers' use of generative AI (and surprise surprise, the technology does not save time but requires more time spent on prompting, checking, and rechecking). It is, as Jathan Sadowski called it years ago, "Potemkin AI," a system that requires humans acting like robots.

It could always get worse. Like, say, Grok, but for kids.

"Artificial Intelligence and Academic Professions" – AAUP has a bunch of recommendations.

Honestly, I just want one school – one! – to step up and say "no" to the ed-tech and AI vultures. I want one school to say "we trust our professors. We trust our students." "We are not your test bed. We are not here to be surveilled and data-mined for your monopolistic ends." "We believe in basic research – not just in science but in all the liberal arts, especially the humanities and social sciences which have some pretty goddamn important things to say about [waves hands around] all this." "We believe in building knowledge and building scholarship, and everything everything everything about the push-button promises of 'AI' is utterly antithetical to our mission." Optional, but implied: "fuck off. And take the LMS with you."

But the AAUP recommendations are, you know, a start.

From Helen Beetham, another banger: "Superintelligent Research."

The California Ideology

"The Hater's Guide To The AI Bubble" – apparently this is a 55-minute read, and folks, I did not, as Ed advised, brew a pot of coffee and read the whole thing.

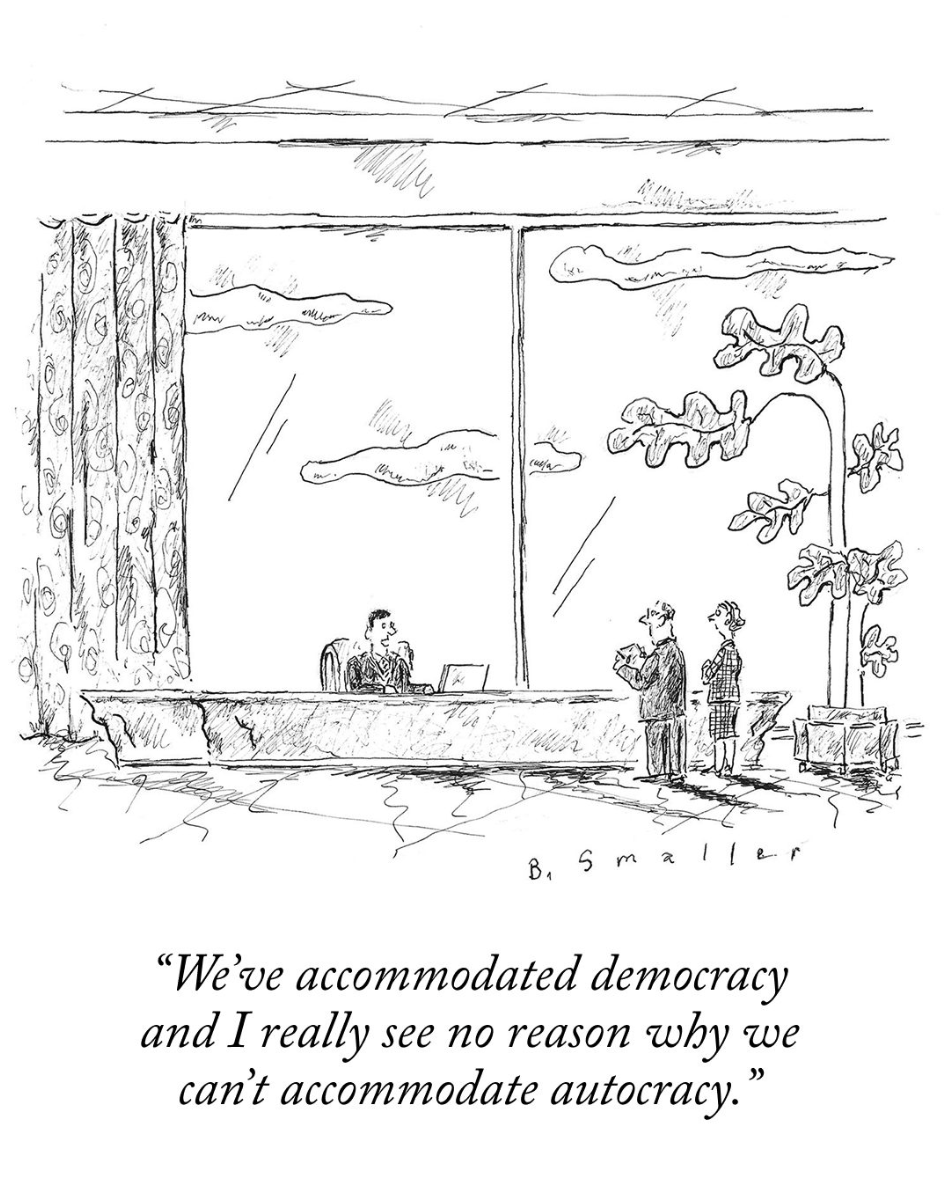

Edward Ongweso Jr is right: the tech oligarchs do not care – "This Silicon Valley Stuff'll Get You Killed." "This unholy alliance—far-right oligarch-ideologues who think democracy and capitalism are incompatible, tech firms with laboratories innovating the armament of fascism, financiers eager to transform speculation into wealth into power, and a host of other demoniacs—is relatively insulated from the public, its concerns, its pressures, its frustrations, and the few levers connected to those that could effect a change. And as a result, it enjoys relatively unimpeded power in building, expanding and legitimizing a police state in this country—a country that has, for a long time now, committed itself to surveillance, social control, force, projection, arbitrary violence, and terror."

Via Paris Marx: "Getting off US tech: a guide"

Anthropic are not "the good guys." "Leaked Slack Messages Show CEO of 'Ethical AI' Startup Anthropic Saying It's Okay to Benefit Dictators."

Eugenics. That is the master plan. "Inside the Silicon Valley push to breed super-babies."

"Fascism for First Time Founders" – Mike Masnick has some tips n' tricks.

You know who the real victims are here (according to this Microsoft employee): the people who use AI: "AI Could Have Written This: Birth of a Classist Slur in Knowledge Work." (Echoes of an earlier piece I wrote on how the "shaming" framework is invoked by powerful people to excuse their anti-social behavior.)

"Silicon Valley AI Startups Are Embracing China's Controversial '996' Work Schedule" – that is, tech companies are demanding employees commit to a 72-hour work week. And golly gee, I'd heard "AI" was going to bring about "full luxury communism."

Thanks for reading Second Breakfast.