When Knowledge is Dangerous, but Information is Power

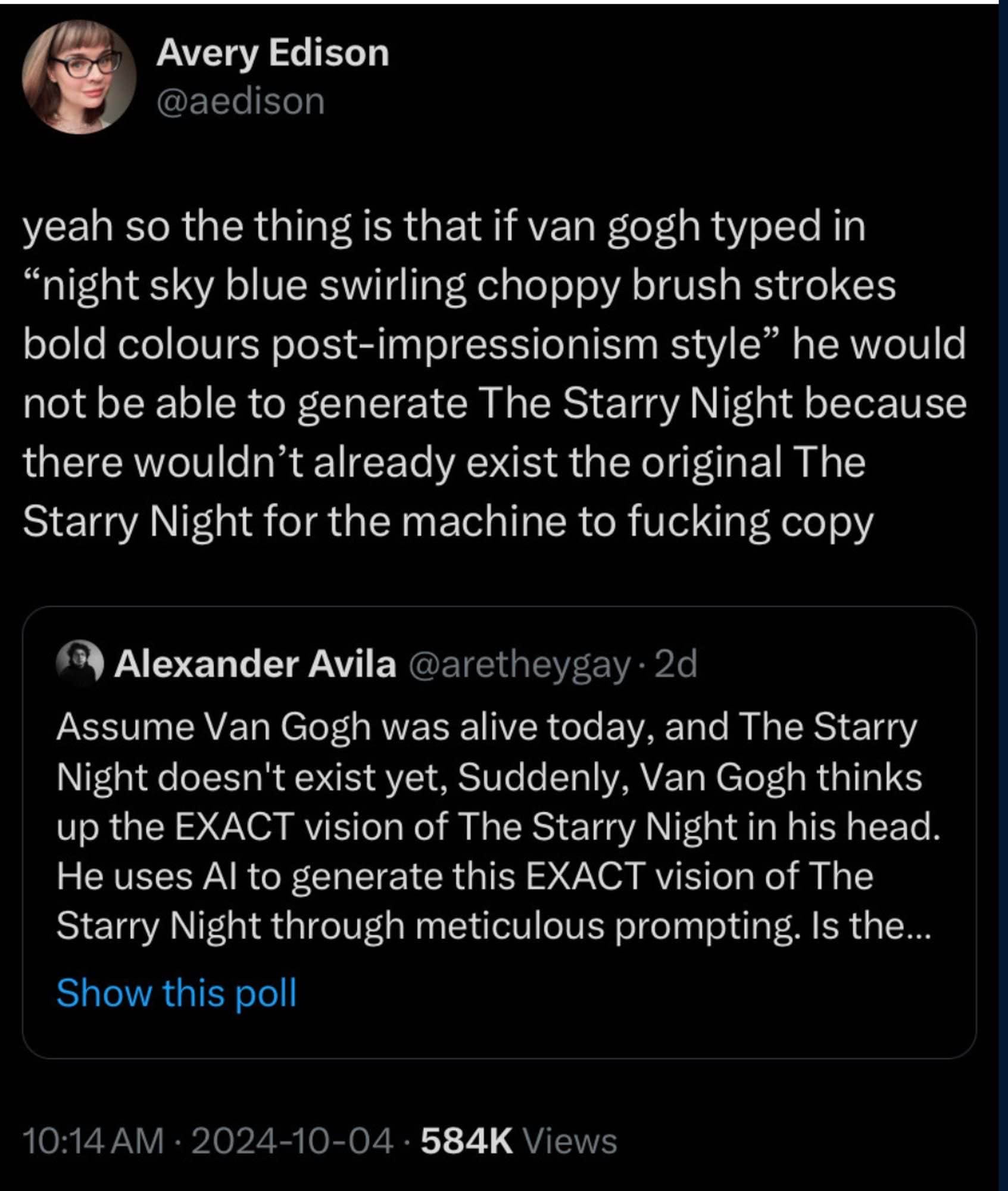

Tressie McMillan Cottom delivered an excellent "mini lecture" on TikTok this week about AI, politics, and inequality. In it, she draws on Daniel Greene's book The Promise of Access: Technology, Inequality, and the Political Economy of Hope, specifically his idea of the "access doctrine," which posits that in a time of economic inequality, the "solution" is more skills (versus more support). Universities, facing their own political and fiscal precarity, have leaned into this, reformulating their offerings to fit this framework: "learn to code" and so on. This helps explain, Tressie argues, why universities have pivoted away from banning AI because "cheating" to embracing AI because "jobs of the future." By aligning themselves culturally and strategically with AI, universities now chase a new kind of legitimacy, one that is not associated with scholarship or knowledge – not with the intelligentsia, god forbid – but with information.

The word "information" replaced "intelligence" in the early days of cybernetics, Matteo Pasquinelli argues in his book The Eye of the Master: A Social History of Artificial Intelligence. It's not that the word "intelligence" has such an awful history, of course – it's linked to standardized testing and to eugenics, and we should recognize that, as such, AI grows out of a system predicated on ranking and bias. But information is capital. No longer can we say that "knowledge is power" – I mean, if that were the case, universities and schools wouldn't be groveling for funding from the very people who loathe these institutions.

This cybernetic shift from "intelligence" to "information" – even before all these recent arguments that we are now/suddenly overwhelmed with "information" and as such need a sense-making technology like AI to help us [eyeroll] – changes how we think about education. Students aren't even "knowledge workers" anymore. They now must become "information processors" themselves, always in competition with some gadget, some algorithm.

This shift means that educational institutions – particularly those helmed by this new managerial mindset – are no longer concerned with scholarship or knowledge reproduction. Indeed, the kind of critical thinking espoused by academia (at least in a handful of disciplines, at a handful of institutions, for a brief period following WWII) is antithetical to this larger project now of subsuming mental labor to the machine. We've known this for a while now – thanks, Mario Savio – but the politics of anti-intellectualism-plus-AI makes this very, very clear.

I dunno, perhaps this explains too why everyone is so damn gung-ho about "summaries" lately – this promise that AI is going to shrink everything down to a short nugget of information. The Sam Bankman-Fried school of thought: fuck Shakespeare, fuck reading, just give me some Bayes and a bullet-pointed list.

It's more efficient, to be sure. It's also much, much safer.

Speaking of public institutions at risk, opting to embrace AI to cut costs, to perform "relevance" and "efficiency" for taxpayers: "National Archives Pushes Google Gemini AI on Employees," 404 Media reports. "Nevada Asked A.I. Which Students Need Help," The New York Times reports. Over 200,000 students were no longer deemed "at risk," and as such, schools lost a lot of funding for support services – so, this shift towards an algorithmic future is "working as intended," I guess. A student was punished for using AI, so his parents sued the school. Dan Meyer on AI's "delivery problem" in education. Obligatory ed-tech snake oil link.

AI and the future of work: "Gartner Predicts 80% of AI Workforce Will Need Upskilling by 2027." At this point, you can almost guarantee that if Gartner says it's a thing, it's not a thing. Bryan Alexander on the latest Gallup polling on who actually uses AI. Spoiler alert: hardly anyone. Not that that's going to stop AI-promoters from trying to convince schools that "prompt engineer" is the job of the future.

AI and colonialism: "The Optimus robots at Tesla’s Cybercab event were humans in disguise," The Verge reports. I mean, we can laugh. We should laugh at anything Elon-Musk-adjacent. But we should also remember that all AI is based on this sleight-of-hand when it comes to labor and the Global South – the mechanical Turk lives on, with all its imperialist implications. As Ryan Broderick writes, this is

> in line with most of the supposedly revolutionary tech entering the market right now. The cashier-less restaurant is managed by a remote worker zooming in to an iPad from The Philippines. The AI models powering ChatGPT are trained by workers in Kenya being paid cents per hour. And TikTok’s For You page is maintained by traumatized moderators in Colombia. Which makes it all the more likely that, long before robots like Optimus are fully-autonomous, they will almost certainly be operated in the same way. By someone, somewhere, for very little money.

More made-up shit from Texas: "Austin will use AI to evaluate residential construction plans" – it's apparently accurate three-fourths of the time, so it is better than a coin flip in deciding whether or not you get a housing permit. "The ‘No. 1’ Restaurant in Austin Is Actually AI Slop," says Grub Street.

What computers still can't do: Apple's report on LLMs isn't making AI promoters happy: "Apple Engineers Show How Flimsy AI ‘Reasoning’ Can Be." Seems the high from the Nobel Prize in Physics wears off pretty quickly. Ars Technica on the "steganographic code channel": "Invisible text that AI chatbots understand and humans can’t."

"I watched an AI collar make a dog talk, and it was unreal." You know, I bet it was.

"AI will never solve this," says Brian Merchant, where "this" could be a number of things, honestly, including "hurricanes, tech's magnificent bribes, and climate change. Plus: The Nobel laureates of AI, OpenAI's fuzzy financial projections, and Uber's latest assault on workers."

Dario Amodei, who runs Anthropic, OpenAI's main competitor, is out with the latest chilling manifesto from Silicon Valley: "Machines of Loving Grace." Ben Riley summarizes it: "techno-optimism as digital eugenics."

Automating harassment: "Anyone Can Turn You Into an AI Chatbot. There’s Little You Can Do to Stop Them." "AI-Powered Social Media Manipulation App Promises to 'Shape Reality'." "Millions of People Are Using Abusive AI ‘Nudify’ Bots on Telegram."

Automating death: "The Doctor Behind the ‘Suicide Pod’ Wants AI to Assist at the End of Life."

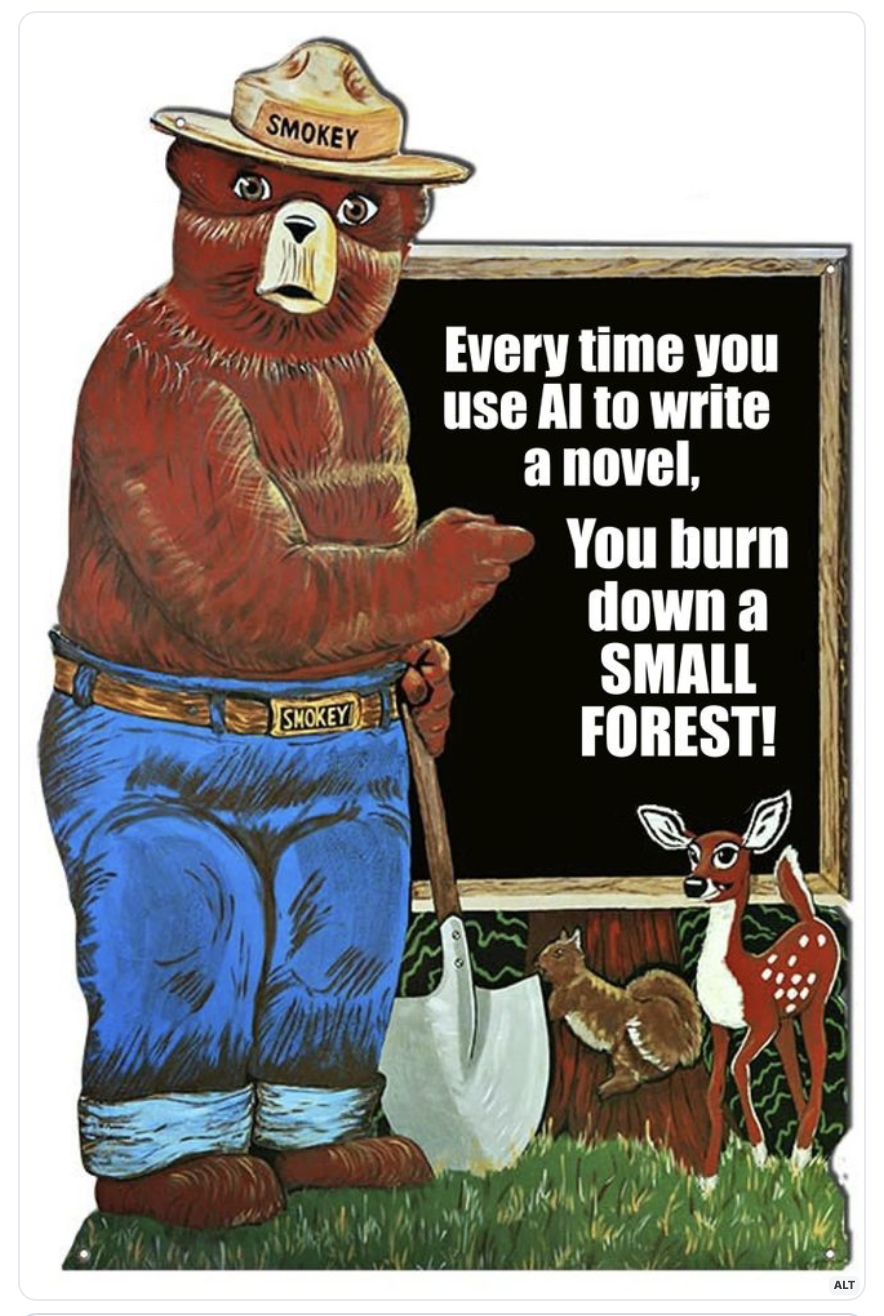

On the heels of news of Microsoft re-igniting Three Mile Island to power Clippy and other chatbots, we learned this week that Amazon has invested $500 million in its own nuclear power plants. The Guardian reports that "Google to buy nuclear power for AI datacentres in 'world first' deal." "World first" here does not mean "let's put the climate first." Oh no. To the contrary. Seems like these assholes don't even want us to get to choose whether we'd rather have generative AI or a future for the planet.

Thanks for subscribing to Second Breakfast. Paid subscribers will hear from me on Monday, with a summary of what I'm reading and thinking about for The Book project.